Survey Bias: 7 Types That Ruin Your Data (And How to Avoid Them)

Ankur Mandal

You just spent three weeks collecting survey responses. Your team invested $15,000 in a market research panel. You got 500 responses back. The numbers look good on paper.

Then you launch the product, and nobody buys it.

What happened? Survey bias happened.

Survey bias is like asking for restaurant recommendations from people who only eat at fancy steakhouses. Sure, you'll get answers. But those answers won't help you if you're trying to open a taco truck.

The problem is bigger than most marketers realize. Survey response rates have crashed from 30% to just 12% over the past decade. That means 88 out of every 100 people you try to survey simply ignore you. And the 12 who do respond? They're often not representative of your actual customers.

Let's break down the seven types of survey bias that are quietly sabotaging your data, and explore a better way forward.

The 7 Types of Survey Bias Killing Your Insights

1. Selection Bias: You're Asking the Wrong People

Selection bias happens when your survey sample doesn't match your actual customer base.

Think about it like this: if you want to know what Americans think about coffee, but you only survey people at Starbucks, you're missing everyone who drinks Dunkin', makes coffee at home, or doesn't drink coffee at all.

Real example: A fitness brand surveys their email list about new product ideas. But their email list only includes customers who already bought something. They're missing potential customers who haven't purchased yet, which is exactly who they need to understand.

Traditional fix: Use random sampling and recruit from multiple sources.

The problem: Even with careful planning, you can't force people to respond. The people who do respond are self-selecting, which brings us to the next bias.
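The traditional fix can be sketched in a few lines. This is a minimal illustration that assumes you can export contact IDs from each recruitment source. The source names (`customers`, `prospects`, `panel`), the IDs, and the helper functions are all hypothetical. The sketch pools the sources into one deduplicated sampling frame and then draws a simple random sample, so prospects who have never purchased have the same chance of an invitation as existing buyers.

```python
import random


def build_frame(*sources):
    """Pool contact IDs from several recruitment sources into one sampling frame.

    Each source is a (source_name, contact_ids) pair. A contact that appears in
    more than one source is kept once, tagged with the first source it came from.
    """
    frame = {}
    for source_name, contact_ids in sources:
        for contact_id in contact_ids:
            frame.setdefault(contact_id, source_name)
    return frame


def simple_random_sample(frame, n, seed=None):
    """Draw n distinct contacts, each with an equal chance of selection."""
    rng = random.Random(seed)
    return rng.sample(sorted(frame), n)


# Hypothetical sources for the fitness-brand example.
frame = build_frame(
    ("customers", ["c1", "c2", "c3"]),
    ("prospects", ["p1", "p2", "c2"]),  # c2 is already a customer
    ("panel", ["x1", "x2"]),
)
invited = simple_random_sample(frame, 4, seed=7)
print(invited, [frame[cid] for cid in invited])
```

Note what this does and doesn't fix: it controls who gets invited, not who actually answers.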

2. Non-Response Bias: The Silent Majority Stays Silent

Non-response bias occurs when the people who skip your survey are fundamentally different from those who complete it.

Here's a simple example: busy executives rarely complete surveys because they don't have time. So when you survey "business decision makers," you're really surveying the less busy ones. The insights? Probably not what your actual target thinks.

Traditional fix: Send reminder emails, offer incentives, keep surveys short.

The problem: Response rates keep dropping anyway. With only 12% responding, you're building strategy on a tiny, possibly unrepresentative slice of your audience.
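To see why a small, self-selected slice is risky, it helps to put numbers on it. In a simple deterministic view, the population mean is a weighted blend of respondents and non-respondents. The error in a respondent-only estimate is therefore the non-response rate times the gap between the two groups: bias = (1 - r) × (respondent mean - non-respondent mean). The sketch below uses the 12% response rate cited above. The purchase-intent shares are hypothetical, and the non-respondent figure is exactly the one you can never observe in a real survey.

```python
def nonresponse_bias(response_rate, respondent_mean, nonrespondent_mean):
    """Error of a respondent-only mean versus the full-population mean.

    Population mean = r * respondent_mean + (1 - r) * nonrespondent_mean, so
    respondent_mean - population mean = (1 - r) * (respondent_mean - nonrespondent_mean).
    """
    if not 0.0 < response_rate <= 1.0:
        raise ValueError("response_rate must be in (0, 1]")
    return (1.0 - response_rate) * (respondent_mean - nonrespondent_mean)


response_rate = 0.12        # the 12% response rate cited in the article
respondent_mean = 0.40      # hypothetical: share of respondents who say they'd buy
nonrespondent_mean = 0.25   # hypothetical: share of non-respondents who would buy

population_mean = response_rate * respondent_mean + (1 - response_rate) * nonrespondent_mean
bias = nonresponse_bias(response_rate, respondent_mean, nonrespondent_mean)
print(f"Respondents: {respondent_mean:.1%}  Everyone: {population_mean:.1%}  Bias: {bias:+.1%}")
```

With these made-up inputs, the survey overstates purchase intent by about 13 percentage points. Reminders and incentives raise r and shrink the first factor, but nothing in the survey itself reveals the second.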

3. Social Desirability Bias: People Lie to Look Good

People don't want to look bad, even to strangers in a survey.

Ask people whether they recycle, and 80% will say yes. Check how much waste actually gets recycled? Closer to 32%. Ask if they eat healthy? Everyone's suddenly a nutritionist. Check their shopping cart data? Frozen pizza and energy drinks.

This is social desirability bias. People answer based on what sounds good, not what's true.

Traditional fix: Use anonymous surveys, phrase questions neutrally, focus on behaviors instead of attitudes.

The problem: Even in an anonymous survey, people know they're answering questions about themselves. The gap between what consumers say they'll do and what they actually do can reach 40-50%.

4. Question Order Bias: Earlier Questions Poison Later Ones

The order you ask questions changes how people answer them.

If you ask "How satisfied are you with our customer service?" right after asking "Did you have any problems with your recent order?", you've just reminded them of problems. Their satisfaction score drops.

It's like asking someone "How's your day going?" right after saying "Did you hear about that terrible news story?" You've already set the mood.

Traditional fix: Randomize question order, put sensitive questions at the end, A/B test different sequences.

The problem: You can reduce this bias but never eliminate it. Every survey still creates its own psychological context.
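Randomizing order usually means giving each respondent a different but reproducible sequence. Here is a minimal sketch, assuming string respondent IDs and hypothetical question keys. It shuffles the core questions with a per-respondent seed and pins sensitive questions to the end, as the traditional fix suggests. Because the seed is the ID itself, you can reconstruct each respondent's order later and compare answers across orders.

```python
import random


def question_order(core_questions, sensitive_questions, respondent_id):
    """Return a per-respondent question order.

    Core questions are shuffled with a generator seeded by the respondent ID, so
    the same respondent always gets the same order while different respondents
    generally see different ones. Sensitive questions keep their original order
    and always come last.
    """
    rng = random.Random(respondent_id)
    shuffled = list(core_questions)
    rng.shuffle(shuffled)
    return shuffled + list(sensitive_questions)


# Hypothetical question keys.
core = [
    "recent_order_problems",
    "customer_service_satisfaction",
    "likelihood_to_recommend",
    "feature_usage",
]
sensitive = ["household_income"]

for rid in ("resp-001", "resp-002"):
    print(rid, question_order(core, sensitive, rid))
```

Since `recent_order_problems` lands before the satisfaction question for some respondents and after it for others, you can split satisfaction scores by that position and see how much the earlier question moved them.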

5. Leading Questions: You're Putting Words in Their Mouths

Leading questions guide respondents toward a specific answer.

"How much do you love our innovative new feature?" assumes they love it. "What improvements would you make to our disappointing checkout process?" assumes it's disappointing.

Even subtle word choices matter. "Would you consider purchasing..." gets different responses than "How likely are you to buy..."

Traditional fix: Write neutral questions, have someone outside your team review the survey, pre-test with a small group.

The problem: It's hard to be objective about your own product. Even well-intentioned marketers accidentally bias their questions.

6. Professional Respondent Bias: Survey Takers Game the System

Some people take surveys for a living. They join multiple panels, complete dozens of surveys monthly, and learn exactly what answers move them through surveys fastest to get their $5 gift card.

These professional respondents don't represent real customers. They represent people who are really good at taking surveys.

Traditional fix: Screen out frequent survey takers, use attention check questions, limit panel usage.

The problem: Research panels are getting harder to trust. The same people cycle through multiple platforms, and they've learned to spot and pass attention checks.
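The screening steps above translate into simple rules over response metadata. This sketch assumes each response records its completion time, the answer to one instructed-response item (for example, "Select 'Somewhat disagree' for this question"), and a count of surveys taken in the last 30 days. Those field names, the one-third-of-median speed cutoff, and the 10-survey limit are hypothetical rules of thumb, not industry standards.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Response:
    respondent_id: str
    completion_seconds: float
    attention_check: str        # answer to an instructed-response item
    surveys_last_30_days: int   # hypothetical field from panel metadata


def flag_low_quality(responses, expected_check, max_recent_surveys=10):
    """Return {respondent_id: [reasons]} for responses that fail any rule."""
    median_seconds = statistics.median([r.completion_seconds for r in responses])
    flagged = {}
    for r in responses:
        reasons = []
        if r.attention_check != expected_check:
            reasons.append("failed attention check")
        if r.completion_seconds < median_seconds / 3:  # assumed speeder cutoff
            reasons.append("speeder")
        if r.surveys_last_30_days > max_recent_surveys:
            reasons.append("frequent survey taker")
        if reasons:
            flagged[r.respondent_id] = reasons
    return flagged


responses = [
    Response("r1", 420, "Somewhat disagree", 2),
    Response("r2", 95, "Somewhat disagree", 3),
    Response("r3", 380, "Strongly agree", 25),
    Response("r4", 510, "Somewhat disagree", 1),
]
print(flag_low_quality(responses, expected_check="Somewhat disagree"))
```

Seasoned panelists learn to pass checks like these, so treat the output as a filter for careless responses, not proof that the remaining ones are genuine.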

7. Acquiescence Bias: People Just Agree with Everything

Some respondents tend to agree with statements regardless of content. This is acquiescence bias, and it's more common than you'd think.

Ask "Do you prefer fast shipping?" They say yes. Ask "Do you prefer to save money even if shipping takes longer?" They also say yes. The two preferences pull in opposite directions, yet the survey records strong agreement with both.

Traditional fix: Mix positive and negative statements, use forced-choice questions, vary answer formats.

The problem: This helps, but doesn't solve the underlying issue that surveys measure what people say, not what they do.
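Mixing positive and negative statements works because a reversed item lets you separate a real preference from a habit of agreeing. Below is a minimal sketch, assuming a 1-5 agreement scale (1 = strongly disagree, 5 = strongly agree) and a hypothetical pair of opposite shipping statements. The negatively worded item is reverse-scored before averaging, and agreeing with both is flagged.

```python
def reverse_code(score, scale_min=1, scale_max=5):
    """Flip a score on the agreement scale (5 -> 1, 4 -> 2, ...)."""
    return scale_min + scale_max - score


def score_pair(pro_item, con_item, agree_threshold=4):
    """Score a statement and its opposite on a 1-5 agreement scale.

    pro_item: agreement with "I'd pay more for faster shipping" (hypothetical item)
    con_item: agreement with "I'd rather wait longer if shipping is cheaper" (hypothetical item)
    """
    preference = (pro_item + reverse_code(con_item)) / 2  # 5 = strongly prefers speed
    raw_agreement = (pro_item + con_item) / 2             # high = agrees regardless of content
    contradictory = pro_item >= agree_threshold and con_item >= agree_threshold
    return preference, raw_agreement, contradictory


answers = {"consistent": (5, 1), "acquiescent": (5, 5)}
for label, (pro, con) in answers.items():
    print(label, score_pair(pro, con))
```

The acquiescent respondent's preference score collapses to the scale midpoint. Balanced items stop yes-saying from masquerading as a strong opinion, but they still can't tell you what that person would actually choose at checkout.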

Why Traditional Solutions Fall Short

Notice a pattern? Every traditional fix helps a little but doesn't solve the core problem.

Traditional surveys have three unfixable limitations:

First, people aren't reliable reporters of their own behavior. There's always a gap between stated intentions and actual actions.

Second, survey quality depends on who responds. With 88% of people ignoring surveys, you're always working with a self-selected sample.

Third, survey fatigue is real. The more surveys people see, the worse the quality of responses becomes. People rush through, contradict themselves, or drop out halfway.

You can optimize your survey design perfectly and still end up with biased, unreliable data.

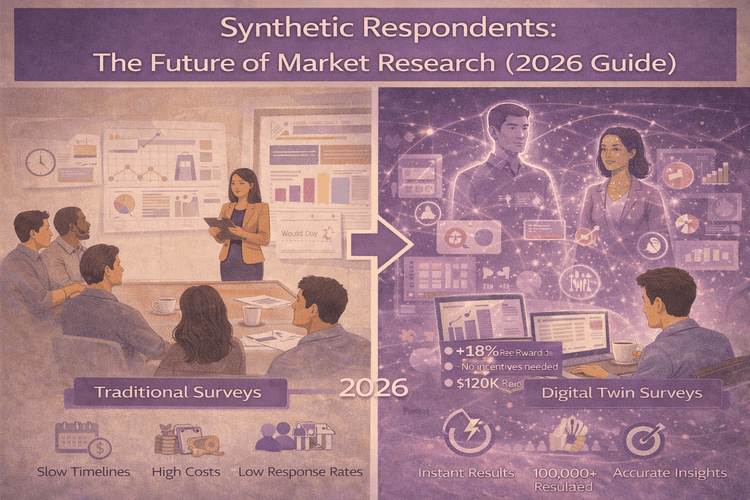

The Digital Twin Solution: Eliminating Bias at the Source

What if instead of trying to reduce bias, you could eliminate entire categories of it?

That's what AI consumer digital twins do.

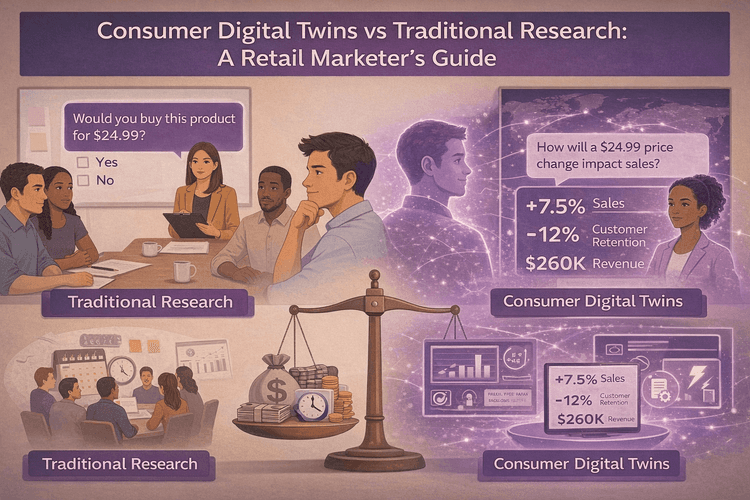

DoppelIQ Atlas creates behavioral simulations of 100,000 real US consumers using actual survey data, purchase patterns, demographic information, and lifestyle signals. Instead of asking people what they might do, you're simulating what they actually would do based on how similar people have behaved.

Think of it like a flight simulator for consumer research. Pilots train in simulators that respond exactly like real planes, based on physics and real flight data. DoppelIQ Atlas works the same way for consumer behavior.

Here's how it eliminates each major bias:

No More Non-Response Bias

The problem: Only 12% of people respond to surveys.

Atlas solution: 100% participation rate. Every one of the 100,000 consumer profiles responds. You get complete representation across all demographics, not just from the 12% willing to click your survey link.

No More Social Desirability Bias

The problem: People say they'll buy organic but purchase conventional.

Atlas solution: AI digital twins respond based on actual behavioral patterns, not what sounds impressive. They're built on what people do, not what they say they do. This closes that 40-50% gap between intentions and actions.

No More Professional Respondent Bias

The problem: The same people take surveys repeatedly and learn to game them.

Atlas solution: No repeat survey takers. No one's earning gift cards by rushing through questions. Every response comes from a behaviorally accurate simulation, not someone trying to finish fast.

No More Response Quality Issues

The problem: Survey fatigue leads to contradictory answers and dropouts.

Atlas solution: AI digital twins don't get tired, don't speed through questions, and don't contradict themselves. You can run unlimited tests without degrading data quality.

No More Selection Bias

The problem: You can't control who chooses to respond.

Atlas solution: You choose exactly which segments to survey from 100,000 representative profiles. Want suburban moms aged 35-44 who shop at Target? Done. Want urban millennials who buy sustainable products? You got it. Complete control over sample selection.

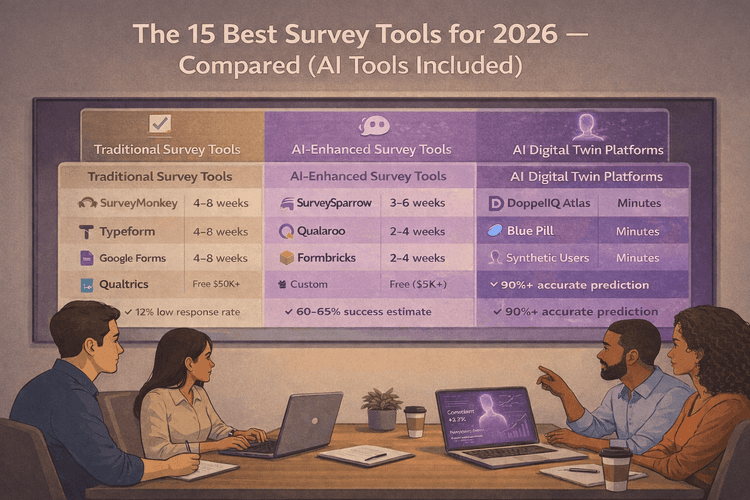

Traditional Surveys vs. DoppelIQ Atlas: The Real Difference

Here's how the approaches compare when dealing with survey bias:

| Bias Type | Traditional Survey Method | The Limitation | DoppelIQ Atlas Solution |
|---|---|---|---|
| Non-Response Bias | Send reminders, offer incentives, keep surveys short | Still only a 12% response rate | 100% participation from all 100,000 consumer profiles |
| Social Desirability Bias | Use anonymous surveys, neutral language | People still answer what sounds good | Based on actual behavior patterns, not stated preferences |
| Selection Bias | Random sampling, recruit from multiple sources | Can't control who actually responds | Fully controlled sample selection from representative profiles |
| Professional Respondent Bias | Screen out frequent survey takers | Same people cycle through multiple panels | No repeat panelists; every twin is fresh |
| Response Quality | Use attention checks, limit survey length | Survey fatigue still degrades answers | Digital twins never get tired or contradict themselves |
| Question Order Bias | Randomize questions, A/B test sequences | Every survey still creates psychological context | Consistent behavioral responses regardless of question order |
| Leading Questions | Write neutral questions, pre-test surveys | Hard to be objective about your own product | Responses based on data patterns, not question phrasing |

Bottom line: Traditional methods try to reduce bias. DoppelIQ Atlas eliminates entire categories of it.

Real Results: Minutes Instead of Months

Here's what this looks like in practice:

A beauty brand wants to test two different product concepts before investing in manufacturing.

Traditional approach: Commission a research study, wait 4-6 weeks, spend $25,000, get 300 responses with unknown biases, hope the data is reliable.

DoppelIQ Atlas approach: Ask the question in plain English ("Which concept resonates more with women 25-40 who buy premium skincare?"), get responses from relevant AI digital twins in minutes, test unlimited variations, spend 90% less.

The accuracy? DoppelIQ Atlas shows 80% correlation with real consumer outcomes. That's not perfect, but it's better than biased survey data that looks scientific but leads you in the wrong direction.

Who This Works For

DoppelIQ Atlas is built for marketing and insights teams who need fast, reliable answers without the bias headaches of traditional surveys.

Perfect if you're:

- Testing campaign messages before spending media budget
- Validating product concepts before manufacturing
- Understanding market segments without waiting weeks
- Getting consumer insights without hiring a research firm

You don't need data science skills. You ask questions in normal English, like "Would young parents pay extra for organic baby food?" The platform handles the complex stuff behind the scenes.

Getting Started

Survey bias isn't going away. Response rates will keep dropping. People will keep telling you what sounds good instead of what's true. Professional respondents will keep gaming your panels.

You can keep fighting these biases one survey at a time, or you can eliminate them at the source.

Want to see how bias-free insights change your decision-making? Compare different consumer research solutions or get started with DoppelIQ Atlas today. No credit card required, and you'll get your first insights within minutes of signing up.

Because the best way to avoid survey bias is to stop relying on surveys that were broken from the start.

Ready to Eliminate Survey Bias From Your Research?

Stop guessing what your customers want. Start knowing what they'll actually do.

✓ No credit card required

✓ 100,000 AI consumer digital twins ready to survey

✓ Get your first insights in minutes

✓ 80% accuracy validated against real outcomes

✓ 90% cost savings vs. traditional research

Join marketing teams who've already ditched biased surveys for behavioral truth.
