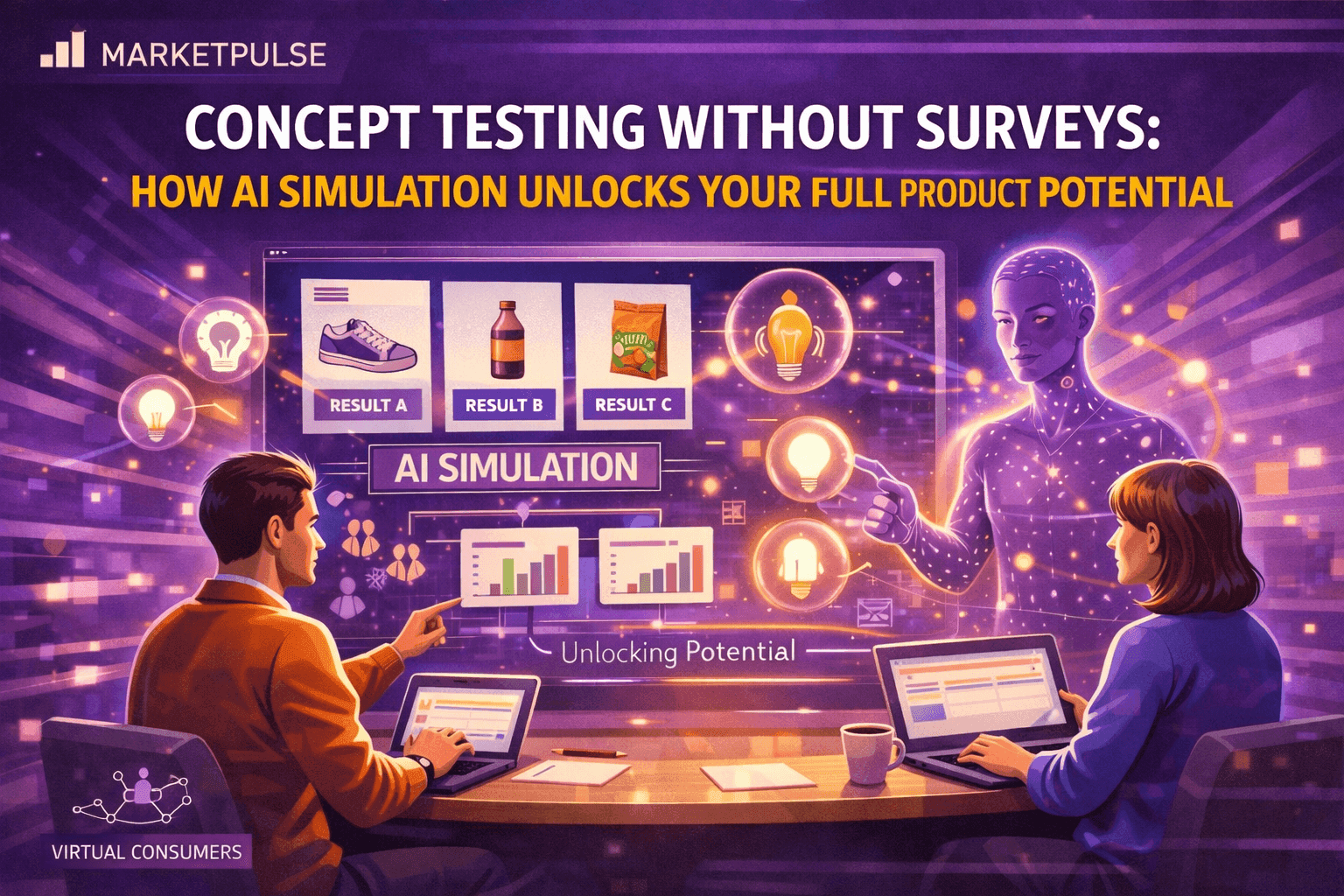

Concept Testing Without Surveys: How AI Simulation Unlocks Your Full Product Potential

Nehan Mumtaz

You've got 10 product ideas. Your budget lets you test 2.

That's the reality for most product and marketing teams. Not because they lack creativity, but because traditional concept testing is expensive and slow. Test one concept with a proper survey? You're looking at $10,000 to $30,000 and three to four weeks of waiting. Want to test all 10 ideas? That's $100,000 to $300,000 and, run back to back, more than half a year gone.

So you pick two concepts, cross your fingers, and hope you chose the winners.

Here's the thing: you're probably leaving your best idea untested.

Think of it like house hunting with a blindfold. You can only peek at two houses before you have to buy one. Sure, those two might be decent, but what about the perfect house three doors down that you never saw?

This is where AI simulation changes everything. Not as a cheaper version of surveys, but as a completely different way to test concepts that lets you explore all your ideas, not just the ones that fit your budget.

Why Traditional Concept Testing Can't Keep Up

Let's talk about what happens when you use traditional surveys for concept testing.

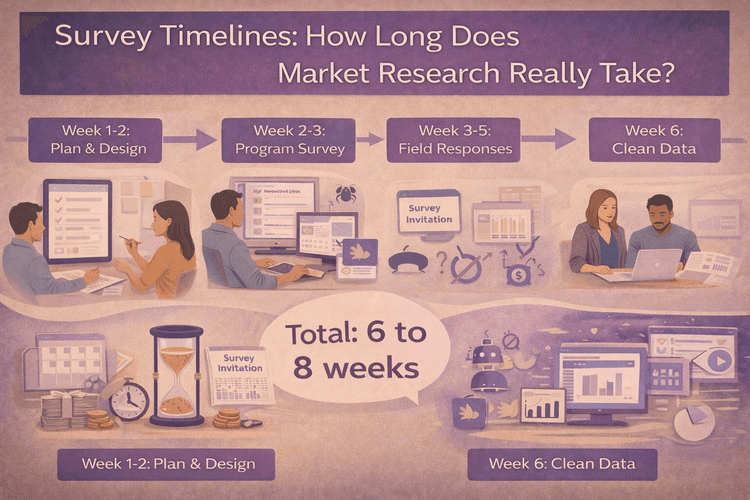

First, there's the cost problem. Every concept you test means another survey to design, another round of people to recruit, another few weeks of waiting. Survey timelines stretch out because you need to find the right people, get them to respond (which is harder than ever), and collect enough responses to mean something.

This forces an awful choice: test everything and blow your budget, or test a few things and gamble on which concepts to explore.
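To make the trade-off concrete, the per-concept figures quoted earlier ($10,000 to $30,000 and three to four weeks) can be scaled up with simple arithmetic. The sketch below is only an illustration: `survey_budget` is a hypothetical helper, and it assumes every concept is tested one after another at the same per-concept cost, which real projects may not do.

```python
def survey_budget(n_concepts, cost_per_concept=(10_000, 30_000), weeks_per_concept=(3, 4)):
    """Estimate the cost and calendar time of testing concepts sequentially.

    Defaults are the per-concept ranges quoted in this article.
    Returns (min_cost, max_cost, min_weeks, max_weeks).
    """
    low_cost, high_cost = cost_per_concept
    low_weeks, high_weeks = weeks_per_concept
    return (
        n_concepts * low_cost,
        n_concepts * high_cost,
        n_concepts * low_weeks,
        n_concepts * high_weeks,
    )


# Compare testing 2 concepts with testing all 10.
for n in (2, 10):
    min_cost, max_cost, min_weeks, max_weeks = survey_budget(n)
    print(
        f"{n} concepts: ${min_cost:,} to ${max_cost:,}, "
        f"{min_weeks} to {max_weeks} weeks if run back to back"
    )
```

For 10 concepts this works out to $100,000 to $300,000 and 30 to 40 weeks when the tests run sequentially. Fielding surveys in parallel would shorten the calendar time but not the spend.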

Most teams pick option two. They narrow down to one or two concepts based on gut feel, test those, and call it validation. But that's not really validation. That's just confirming whether your gut was right about the concepts you could afford to test.

Second, there's the iteration problem. Got feedback that says people like your concept but the price point feels high? Great! Now you need to redesign the survey, recruit new people (can't reuse the same panel), and wait another three weeks. By then, your launch window might be closing or a competitor might have moved.

Traditional concept testing treats each test like a final exam. You get one shot, then you wait for results. There's no room for the kind of rapid learning that actually builds great products.

The Hidden Trap: Early Concepts and Survey Bias

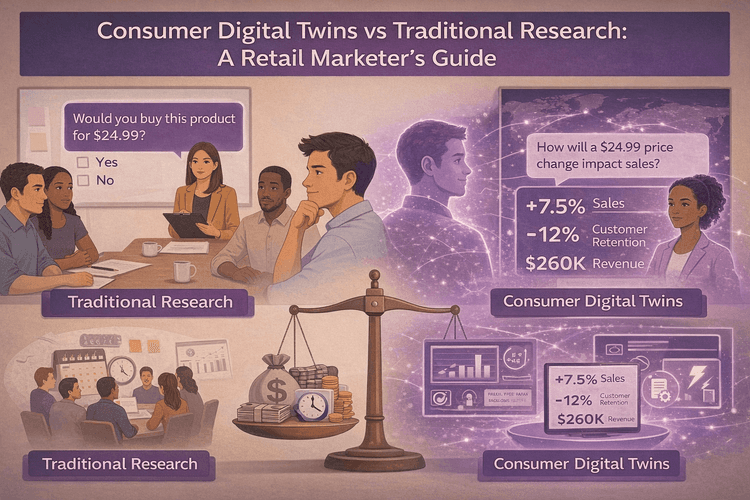

Here's something most marketers know but rarely say out loud: people are really bad at predicting what they'll actually buy.

Ask someone "Would you pay $8 for sustainable, organic coffee pods?" and they'll probably say yes. It sounds good. It makes them feel like a responsible consumer. But when they're standing in the grocery aisle looking at $5 regular pods next to $8 sustainable ones? Their actual behavior often doesn't match what they told you in the survey.

This gap between what people say and what they do gets even worse with early-stage concepts. When you're testing something new or unfamiliar, respondents don't have real experience to draw from. They're guessing. Or worse, they're telling you what they think sounds right.

This is survey bias (researchers usually call it response bias), and it's everywhere in concept testing. People want to sound smart, look good, or just get through your 20-question survey so they can collect their $5 incentive.

The more abstract your concept, the more bias creeps in. And concepts, by definition, are abstract. You're not testing a finished product. You're testing an idea.

So you end up with data that tells you what people think they should want, not what they'll actually buy. That's a shaky foundation for a product launch.

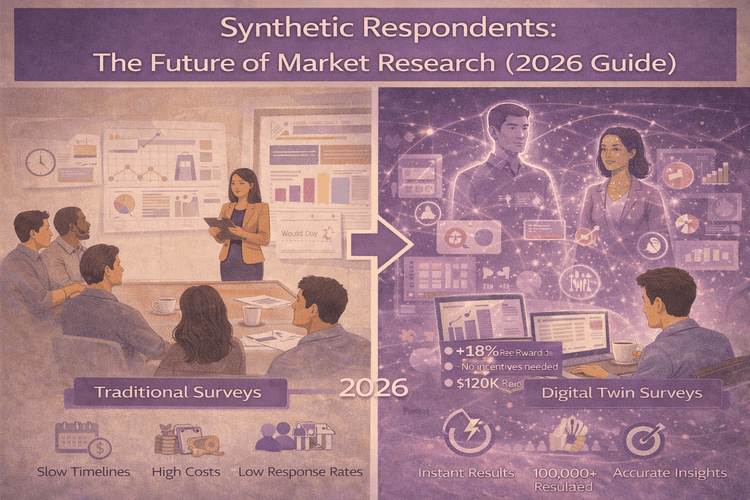

AI Simulation: A Smarter Way to Test Concepts

This is where AI simulation platforms like DoppelIQ Atlas completely change the game.

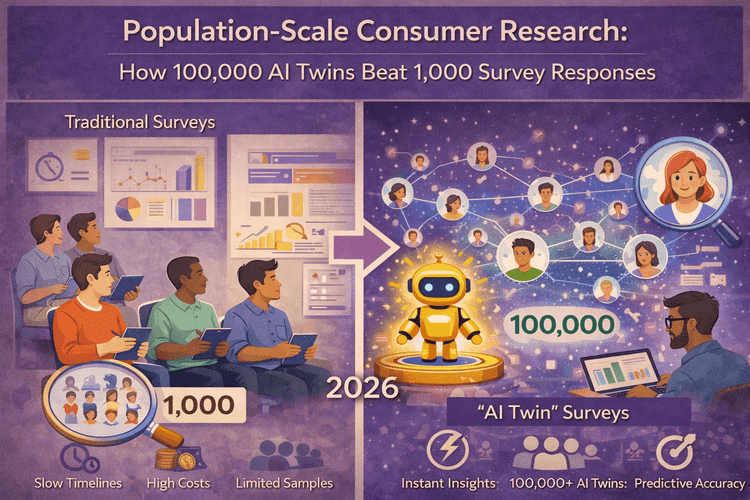

Instead of surveying real people one concept at a time, you're querying a population-scale model of 100,000+ US consumer digital twins. These aren't generic AI personas or made-up stereotypes. They're built from real national survey data, actual behavioral patterns, demographics, and psychographics.

Think of it like this: traditional surveys are like calling 500 random people and asking them questions. AI simulation is like having a model of the entire US consumer population that you can ask anything, anytime, as many times as you want.

Here's how it actually works. You sign up for Atlas (it's free to start), and you immediately get access to those 100,000+ consumer profiles. No data to upload. No technical setup. No waiting.

Then you just ask your question in plain English: "Would young professionals in urban areas pay $12 for a sustainable lunch subscription?"

Within seconds, you get answers broken down by demographics, occupations, regions, whatever segments matter to you. You can instantly ask follow-ups: "What concerns would they have?" or "Which features matter most to them?"

The accuracy? About 91% correlation with real consumer survey outcomes. That's actually comparable to traditional survey reliability, and it's grounded in real behavioral data, not just what people think sounds good.
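One way to sanity-check a figure like this on your own concepts is to score the same set of concepts both ways and compute the correlation yourself. The sketch below assumes Pearson correlation and uses placeholder numbers. The article does not say which metric or dataset sits behind the 91% figure, so treat `pearson` and the sample scores as illustrative only.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / sqrt(var_x * var_y)


# Placeholder values for illustration only, NOT real results:
# purchase-intent scores (0-100) for the same five concepts.
simulated = [62, 48, 71, 35, 55]
surveyed = [58, 50, 69, 40, 51]
print(f"correlation: {pearson(simulated, surveyed):.2f}")
```

A value close to 1 means the two methods rank and space the concepts similarly. It does not by itself show that either method predicts what people will actually buy.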

The Real Power: Test Everything, Learn Fast, Refine Constantly

Here's where things get interesting.

Remember those 10 product concepts? With AI simulation, you can test all of them. This morning. Right now.

You're not limited by budget or time. You can explore the full solution space instead of picking two ideas and hoping for the best.

Let's walk through an example of how this works:

Monday morning: You've got 15 different messaging angles for your new product. You test all 15 on Atlas across different age groups and income levels. Two hours later, you know which three messages resonate strongest and with whom.

Monday afternoon: You take those top three messages and test variations. Adjust the pricing. Change the benefits order. Test different packaging concepts. By end of day, you've refined your concept based on hundreds of data points.

Tuesday: You test your refined concept against different use cases and buying scenarios. You discover that suburban families love it for different reasons than urban millennials. You spot a potential objection you never considered. You adjust and retest immediately.

Wednesday: You've got a highly optimized concept that's been tested across dozens of variations and segments. Now you can run a traditional survey on your refined concept if you need stakeholder buy-in or final validation.

This is concept validation at a completely different speed and scale.

The old way forced you to commit to a concept early and hope the survey validated it. The new way lets you explore, learn, and refine until you have something that actually works.
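The explore-then-narrow loop in the example above can be sketched in a few lines. This is an illustration only: `shortlist` is a hypothetical helper, and the scores are placeholder numbers standing in for whatever a simulation tool returns. It is not Atlas output and not an Atlas API.

```python
from statistics import mean


def shortlist(scores, top_n=3):
    """Rank concepts by mean score across segments and keep the top_n.

    scores: {concept_name: {segment_name: score}}
    Returns a list of (concept, mean_score, strongest_segment) tuples,
    highest mean score first.
    """
    ranked = []
    for concept, by_segment in scores.items():
        strongest_segment = max(by_segment, key=by_segment.get)
        ranked.append((concept, mean(by_segment.values()), strongest_segment))
    ranked.sort(key=lambda row: row[1], reverse=True)
    return ranked[:top_n]


# Placeholder scores (0-100) per age group, for illustration only.
scores = {
    "Message A": {"18-34": 64, "35-54": 52, "55+": 41},
    "Message B": {"18-34": 47, "35-54": 58, "55+": 60},
    "Message C": {"18-34": 39, "35-54": 44, "55+": 37},
    "Message D": {"18-34": 70, "35-54": 61, "55+": 49},
}
for concept, avg, segment in shortlist(scores, top_n=3):
    print(f"{concept}: mean {avg:.1f}, strongest in {segment}")
```

Ranking by the mean is just one choice. If a single segment matters most for your launch, sorting by that segment's score may be more useful than the overall average.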

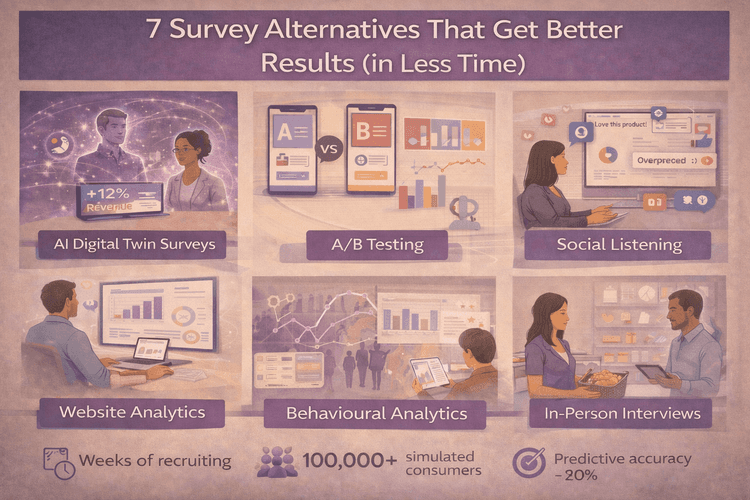

When to Use AI Simulation vs Traditional Surveys

The question isn't "which is better?" It's "which tool for which job?"

Here's a simple framework:

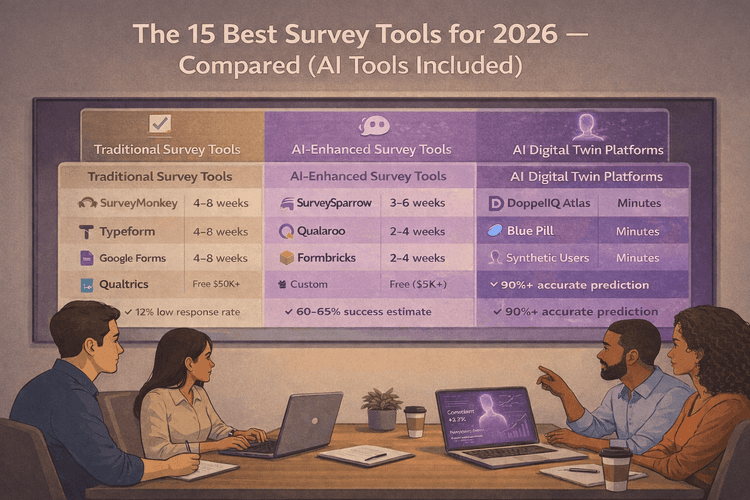

| Use Case | AI Simulation | Traditional Surveys |
|---|---|---|
| Concept Testing | Screening 5+ concept variations | Final validation before major launches |
| Iteration Speed | Rapid iteration and refinement | Slow; changes require new fieldwork |
| Research Stage | Early-stage exploration | Late-stage confirmation |
| Audience Coverage | Testing across multiple segments | B2B or highly niche markets |
| Insight Depth | Identifying hidden barriers or biases | Deep emotional or ethnographic insights |
| Budget Efficiency | Quick sanity checks before big research spend | Annual brand tracking studies |

The smartest approach? Use both strategically.

Start with AI simulation to explore your full range of ideas. Test 10, 20, even 50 variations. Learn what works and why. Refine your concepts based on real behavioral patterns. Narrow down to your top performers.

Then use traditional surveys to validate those refined concepts with real respondents. This gives you the confidence and stakeholder buy-in you need while ensuring you're testing the actual best concepts, not just the first ones you thought of.

You'll end up with better concepts, more learning, and often lower total costs because you're not wasting survey budget on concepts that would have failed anyway.

For more on alternatives to traditional surveys, check out our comprehensive comparison of consumer research solutions.

Getting Started With AI-Powered Concept Testing

The best part? You don't need a data science team, customer data to upload, or months of setup.

Here's how to start:

- Run a parallel test: Take your next planned concept test and run it on DoppelIQ Atlas first. See what you learn. Compare it to your assumptions.
- Start with quick wins: Test message variations, feature priorities, or concept rankings. These are easy questions that prove value fast.
- Build the habit: Use simulation for your regular "should we test this?" questions. The ones that don't quite justify a full survey but would benefit from data.

You don't need to replace all your research. Just add a fast, exploratory layer that helps you test smarter, not harder.

Learn more about synthetic respondents and population-scale consumer research to understand how this technology is reshaping market research.

The Bottom Line: Stop Testing in the Dark

Traditional concept testing made sense when it was the only option. But forcing teams to test just one or two ideas because that's all the budget allows? That's not strategy. That's constraint.

AI simulation removes that constraint. It lets you explore the full possibility space, learn what actually resonates with consumers, and refine your concepts before committing real money.

The future of concept testing isn't surveys versus simulation. It's using the right tool at the right stage. Simulation for exploration and iteration. Surveys for validation and confidence.

Your best product idea might be the one you couldn't afford to test before. Now you can.

Frequently Asked Questions

What is concept testing?

Concept testing is checking if people will actually like and buy your product idea before you build it. It helps you avoid launching things nobody wants.

How accurate is AI simulation compared to real surveys?

DoppelIQ Atlas shows about 91% correlation with real consumer surveys, which is comparable to traditional survey reliability and often better than biased survey responses.

Do I need to provide customer data to use AI simulation?

No. DoppelIQ Atlas is pre-built with 100,000+ US consumer profiles. You just sign up and start asking questions immediately.

Can AI simulation replace all my market research?

Not entirely. It's best for exploration, screening, and iteration. You'll still want traditional research for final validation and when stakeholders need proof from real respondents.

How long does it take to get results?

Instantly. Ask your question and get responses in seconds, not weeks. You can test and refine concepts in the same afternoon.

What types of questions can I ask?

Anything you'd ask in regular market research: purchase intent, feature preferences, pricing reactions, segment differences, barriers to adoption, and more.

Is this just for big companies with big budgets?

Actually, it's perfect for teams with limited budgets. You get enterprise-level insights without enterprise-level costs. Start free and scale as needed.

Ready to Test Your Full Range of Product Ideas?

Stop limiting yourself to testing just one or two concepts. With DoppelIQ Atlas, you can explore all your ideas, learn what works, and refine your concepts before spending a dollar on development or advertising.

Start testing for free today and see what you've been missing.
