Customer digital twins vs focus groups vs ChatGPT: Concept testing in market research

Ankur Mandal

You've probably sat through the ritual. Eight people around a table, lukewarm coffee, one-way mirror. A moderator asks leading questions while you watch from the other side, hoping these strangers represent your millions of customers.

Focus groups have been the backbone of concept testing for decades. They give you rich qualitative feedback, capture group dynamics, and help you discover unexpected insights. But they're also expensive, slow, and often wrong about what people actually do.

The problem is threefold: eight people can't speak for millions of customers, one loud voice can sway the whole room, and what people say they'll do often isn't what they actually do. People say they want healthy snacks, then buy chips. They claim price doesn't matter, then choose the cheapest option.

On top of that, by the time weeks of focus group research deliver results, your competitor may have already launched.

Enter customer digital twins, which let you query thousands of simulated customer cohorts in real time.

What is a customer digital twin?

A customer digital twin is a virtual simulation of how your real customers think and behave, built using first-party data, behavioral signals, and predictive models. It lets you test how different customer segments will react to your campaigns, pricing strategies, product features, or marketing creatives before you launch in the real world.

Think of it as the difference between judging a movie by focus group reactions and having Netflix's algorithm that predicts viewing behavior based on millions of actual watching patterns.

Market research process compared: decision process with focus groups vs digital twins

Traditional market research depends on focus groups and usability testing. This creates a bottleneck that slows down everything and costs a fortune.

The old way: focus groups and usability testing

Here's how most companies test new ideas:

- Start with big research studies (6-12 weeks, $50,000-$200,000)

- Hold internal strategy meetings (2-4 weeks of discussions)

- Run focus groups for each idea ($50,000-$500,000 per test, 2-7 days each)

- Schedule usability testing sessions (more time, more money)

- Make gut decisions on ideas you couldn't afford to test

- Launch and hope it works (wait 2-4 weeks to see results)

- If it fails, start over with more focus groups

The problem? Most daily decisions never get tested because focus groups are too expensive and slow. Companies end up guessing.

The new way: digital twins replace focus groups

Digital twins change everything after step 2:

- Same initial research (6-12 weeks, $50,000-$200,000)

- Same strategy meetings (2-4 weeks)

- Set up digital twins (1-2 weeks, one-time cost)

- Test unlimited ideas instantly (no focus groups needed)

- Get results in minutes (not days or weeks)

- Launch with confidence (already tested everything)

- Keep improving (test new ideas same day)

Want to test 50 headlines? Done in minutes without recruiting anyone. Need to see customer reactions to pricing? Instant results without scheduling sessions.

Where focus groups are used and how digital twins improve it

1. Product concept testing

How focus groups are used: Focus groups are used for early-stage validation of new product ideas, features, and packaging to understand initial consumer reactions and preferences. However, the limited sample sizes can miss important segments, and opinions may not reflect broader market preferences or actual purchasing decisions.

How digital twins improve it: Digital twins simulate reactions from thousands of customer segments based on actual purchase history, demographics, and behavioral patterns. This is all built on the internal data that you already have. See how frequent buyers respond differently than occasional customers, or how price-sensitive segments react to premium features.

Build digital twins for your top 20% of customers before expanding to broader audiences. Their behavior patterns are typically more predictable and valuable to model.
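As a minimal sketch of that first step, the snippet below picks the top 20% of customers from purchase records. It assumes "top" means highest total spend, which the article does not specify, and the `(customer_id, amount)` record shape and the `top_customers` helper are hypothetical names used only for illustration.

```python
from collections import defaultdict
from math import ceil


def top_customers(purchases, fraction=0.2):
    """Return IDs of the top `fraction` of customers by total spend.

    `purchases` is an iterable of (customer_id, amount) pairs.
    Ranking by spend is an assumption; recency or frequency would also work.
    """
    totals = defaultdict(float)
    for customer_id, amount in purchases:
        totals[customer_id] += amount
    if not totals:
        return []
    # Highest spend first; ties are broken by customer ID for stable output.
    ranked = sorted(totals, key=lambda cid: (-totals[cid], cid))
    keep = max(1, ceil(len(ranked) * fraction))
    return ranked[:keep]


# Made-up purchase records, for illustration only.
purchases = [
    ("a", 120.0), ("b", 40.0), ("c", 300.0),
    ("a", 80.0), ("d", 15.0), ("e", 60.0),
]
print(top_customers(purchases))  # ['c']
```

The same ranking could be driven by purchase frequency or recency instead of spend, depending on which customers matter most to the business.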

2. Creative testing

How focus groups are used: Focus groups are interviewed to test campaigns, taglines, messaging, and visuals, gauging emotional response and message clarity. However, group dynamics can influence individual opinions, reactions in a controlled setting may differ from real-world exposure, and the session's success depends heavily on the moderator's skill in facilitating unbiased discussions.

How digital twins improve it: Once set up, you can test dozens of creative variations across precise segments instantly, every day. See which headlines drive clicks, which images increase conversion, and which calls to action generate sales.

3. Brand perception testing

How focus groups are used: Focus groups help you understand brand associations, values, and emotional drivers to guide positioning and messaging strategies. However, brand perception discussions are inherently subjective, can be difficult to translate into actionable business metrics, and depend heavily on the moderator's ability to probe for deeper insights without leading responses.

How digital twins improve it: Use customer data to track how people's feelings about your brand change over time and predict whether those feelings will lead to actual purchases.

Monitor metrics like time spent on product pages, willingness to pay higher prices, and cross-sell acceptance rates as indicators of brand strength.

4. Customer journey testing

How focus groups are used: Focus groups help identify pain points in user experience flows and customer journeys through guided discussions and feedback sessions. However, people often struggle to accurately recall their experiences or may describe ideal scenarios rather than typical behavior patterns.

How digital twins improve it: Simulate complete customer journeys across all touchpoints. Model sign-up flows, identify churn triggers, optimize conversion pathways with real behavioral data.

Use digital twins to test simple improvements at these friction points before investing in major redesigns.

Focus groups vs customer digital twins: head-to-head comparison

The difference between focus groups and customer digital twins is like the difference between developing film in a darkroom and taking digital photos. Both capture images, but one lets you see results immediately and take unlimited shots.

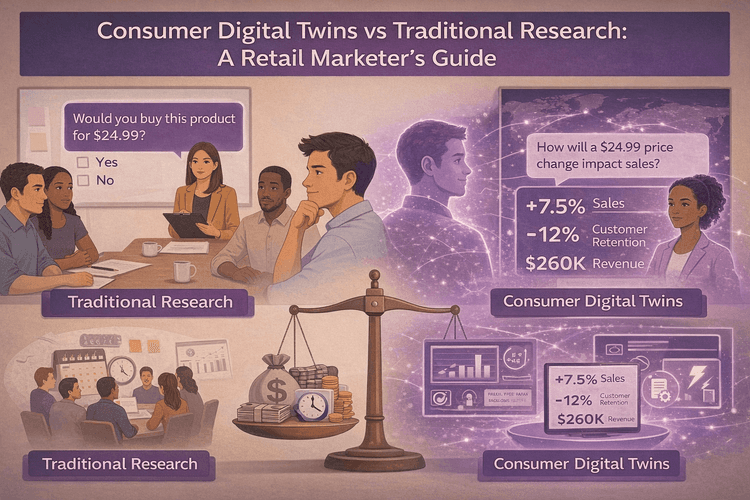

| What matters | Focus groups | Customer digital twins |
|---|---|---|
| Speed | Weeks to months | Minutes to hours |
| Cost per test | $5,000–$15,000 | Near zero after setup |
| Sample size | 8–12 people | Thousands of customer segments |
| Accuracy | Stated opinions (biased) | Behavioral predictions |
| Scalability | Each variation needs new groups | Unlimited parallel testing |
| Repeatability | Expensive to re-run | Test constantly |

Digital twins vs ChatGPT for concept testing

Many companies wonder whether they can simply use ChatGPT or other AI tools for concept testing instead of investing in specialized digital twin technology. We recently ran a study comparing responses from humans (a focus group), GPT-4o and GPT-5, and DoppelIQ, our digital twin platform (link to the report). We surveyed consumers on realistic scenarios around sustainable household products. The focus group was recruited from LinkedIn, and its answers to our questions served as the baseline for the comparison. The report shows why systems built for customer insights outperform general AI at predicting behavior.

The accuracy gap

Measured against the focus group baseline, digital twins were the clear winner: DoppelIQ's digital twins reached 83% accuracy, GPT-4o reached 69%, and GPT-5 reached only 48%. The reason is that ChatGPT models are built for general reasoning, while DoppelIQ's digital twins are built to derive customer insights.
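The report's exact scoring method isn't described here. One simple way to read "accuracy against a baseline" is the share of questions where a system's answer matches the human answer. The sketch below assumes that reading; the `match_rate` helper and the sample answers are made up for illustration and are not data from the study.

```python
def match_rate(baseline, predicted):
    """Fraction of questions where the prediction equals the baseline answer."""
    if len(baseline) != len(predicted):
        raise ValueError("baseline and predicted must have the same length")
    if not baseline:
        raise ValueError("need at least one answer")
    hits = sum(b == p for b, p in zip(baseline, predicted))
    return hits / len(baseline)


# Made-up answers to six multiple-choice questions, for illustration only.
human_baseline = ["A", "C", "B", "B", "D", "A"]
model_answers = ["A", "C", "B", "D", "D", "B"]
print(f"{match_rate(human_baseline, model_answers):.0%}")  # 67%
```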

The interpretation problem

The bigger issue isn't just accuracy; it's how these systems interpret customer opinions. Most AI tools amplify extremes, while digital twins find the consensus that actually drives adoption.

Our survey showed ChatGPT achieved 45-70% accuracy in predicting customer choices. But when we looked deeper at how ChatGPT distributed responses across different options, we discovered a major problem: the pattern was completely wrong. While ChatGPT got some individual predictions right, it consistently misread which responses would be most common. This meant the overall market picture it painted was highly misleading.
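To see how a model can get many individual predictions right while still painting the wrong overall picture, here is a small sketch with made-up responses to a single question. It compares the per-respondent match rate with the shape of the answer distribution, using total variation distance as one possible mismatch measure. That metric is our choice for the illustration, not necessarily what the report used.

```python
from collections import Counter


def answer_shares(answers):
    """Map each answer option to its share of all responses."""
    counts = Counter(answers)
    return {option: n / len(answers) for option, n in counts.items()}


def total_variation(actual, predicted):
    """Half the summed absolute gap between two answer distributions (0 to 1)."""
    a, p = answer_shares(actual), answer_shares(predicted)
    options = set(a) | set(p)
    return 0.5 * sum(abs(a.get(o, 0.0) - p.get(o, 0.0)) for o in options)


def most_common(answers):
    """The single most frequent answer."""
    return Counter(answers).most_common(1)[0][0]


# Made-up data: ten respondents answering "Would you buy this?"
actual = ["maybe"] * 5 + ["yes"] * 3 + ["no"] * 2
predicted = ["maybe"] * 3 + ["yes"] * 5 + ["no", "yes"]

individual_hits = sum(a == p for a, p in zip(actual, predicted)) / len(actual)
print(f"individual match rate: {individual_hits:.0%}")      # 70%
print(f"actual top answer:     {most_common(actual)}")      # maybe
print(f"predicted top answer:  {most_common(predicted)}")   # yes
print(f"distribution gap:      {total_variation(actual, predicted):.2f}")  # 0.30
```

In this toy case the model matches 7 of 10 respondents, yet it reports "yes" as the dominant answer when the real majority said "maybe".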

This matters because data interpretation, not enthusiasm, drives real adoption curves. Products succeed when the practical middle adopts them, not just when early enthusiasts love them.

Scale and consistency

When testing across 500+ customer personas, general AI models like ChatGPT struggled to maintain representative distributions. DoppelIQ, a digital twin platform, stayed consistent because it is built specifically for structured survey synthesis across millions of personas.

Think of it this way: ChatGPT is like a brilliant generalist who can discuss anything but specializes in nothing. Digital twins are like hiring a specialist who only does customer behavior prediction but does it better than anyone else.

Why general AI (ChatGPT) struggles with concept testing

Language-first design: ChatGPT was built to generate convincing text, not predict real behavior. It optimizes for what sounds good, not what people actually do.

Training bias: General AI models are trained on text that over-represents vocal opinions and extreme positions. Real markets are dominated by quiet, practical decision-makers.

No behavioral foundation: ChatGPT lacks the behavioral data and decision pathway modeling that digital twins use to mirror real customer thinking.

Real users on Reddit have shared their experiences researching with ChatGPT, and the results mirror what we found in our research. Multiple discussion threads reveal similar frustrations and insights about using general AI for concept validation. Here’s a summary of what the Reddit threads cover:

| Insight Category | Summary |
|---|---|
| Human authenticity matters | ChatGPT lacks emotional nuance and real-user authenticity |
| Far from actual behaviour (Accuracy challenges) | It may reflect typical responses, but isn’t grounded in actual behavior |
| Helpful for ideation, not validation | Use to generate ideas or simulate reactions, but always validate with real people |

Frequently asked questions

Are digital twins more accurate than traditional research methods?

Digital twins predict actual behavior rather than stated intentions. They're built on what customers do, not what they say they'll do. For behavioral predictions, they consistently outperform traditional methods with 83% accuracy rates compared to 45-60% for focus groups. (Link to the report)

How fast can you get results from digital twins?

Minutes to hours instead of weeks to months. Run multiple scenarios simultaneously and get instant feedback on concept variations. Most DoppelIQ simulations complete in under 30 minutes.

What data do you need to build customer digital twins?

First-party customer data works best: purchase history, website behavior, demographic information, and engagement patterns. The more behavioral data, the more accurate the predictions. Minimum viable dataset: 6 months of customer transactions and basic demographics.
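A rough readiness check for that minimum dataset could look like the sketch below. The field names `age_band` and `region` are hypothetical stand-ins for "basic demographics", and both helper functions are illustrative rather than part of any DoppelIQ tooling.

```python
from datetime import date


def whole_months_between(first, last):
    """Count whole calendar months from `first` to `last`."""
    months = (last.year - first.year) * 12 + (last.month - first.month)
    if last.day < first.day:
        months -= 1  # the final month is not complete yet
    return months


def meets_minimum_dataset(transaction_dates, demographic_fields,
                          required_fields=("age_band", "region"),
                          min_months=6):
    """Check for 6+ months of transactions plus basic demographics.

    `required_fields` is an assumed definition of "basic demographics".
    """
    if not transaction_dates:
        return False
    span = whole_months_between(min(transaction_dates), max(transaction_dates))
    has_demographics = set(required_fields) <= set(demographic_fields)
    return span >= min_months and has_demographics


# Made-up transaction dates, for illustration only.
dates = [date(2024, 1, 15), date(2024, 4, 2), date(2024, 7, 20)]
print(meets_minimum_dataset(dates, {"age_band", "region", "gender"}))  # True
print(meets_minimum_dataset(dates[:2], {"age_band", "region"}))        # False
```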

Can digital twins capture emotional responses?

Yes, by analyzing behavioral patterns that correlate with emotional states. While they can't replicate the nuanced emotional discussions of focus groups, they can predict which concepts drive emotional engagement based on past behavioral responses to similar stimuli.

The future belongs to predictive testing

Market research is shifting from asking people what they think to predicting what they'll do. Customer digital twins enable faster, cheaper, and more accurate decision-making across product development, advertising, and brand strategy.

Companies that adopt digital twin testing will reduce wasted marketing spend and bring winning concepts to market while competitors are still scheduling focus groups.

The question isn't whether digital twins will replace traditional research methods. It's whether you'll adapt to them before your competition does.

Ready to transform your concept testing? DoppelIQ's customer digital twins eliminate the guesswork from product launches and marketing campaigns. Get predictive insights in minutes, not months. Contact us to see how behavioral prediction can revolutionize your research process.