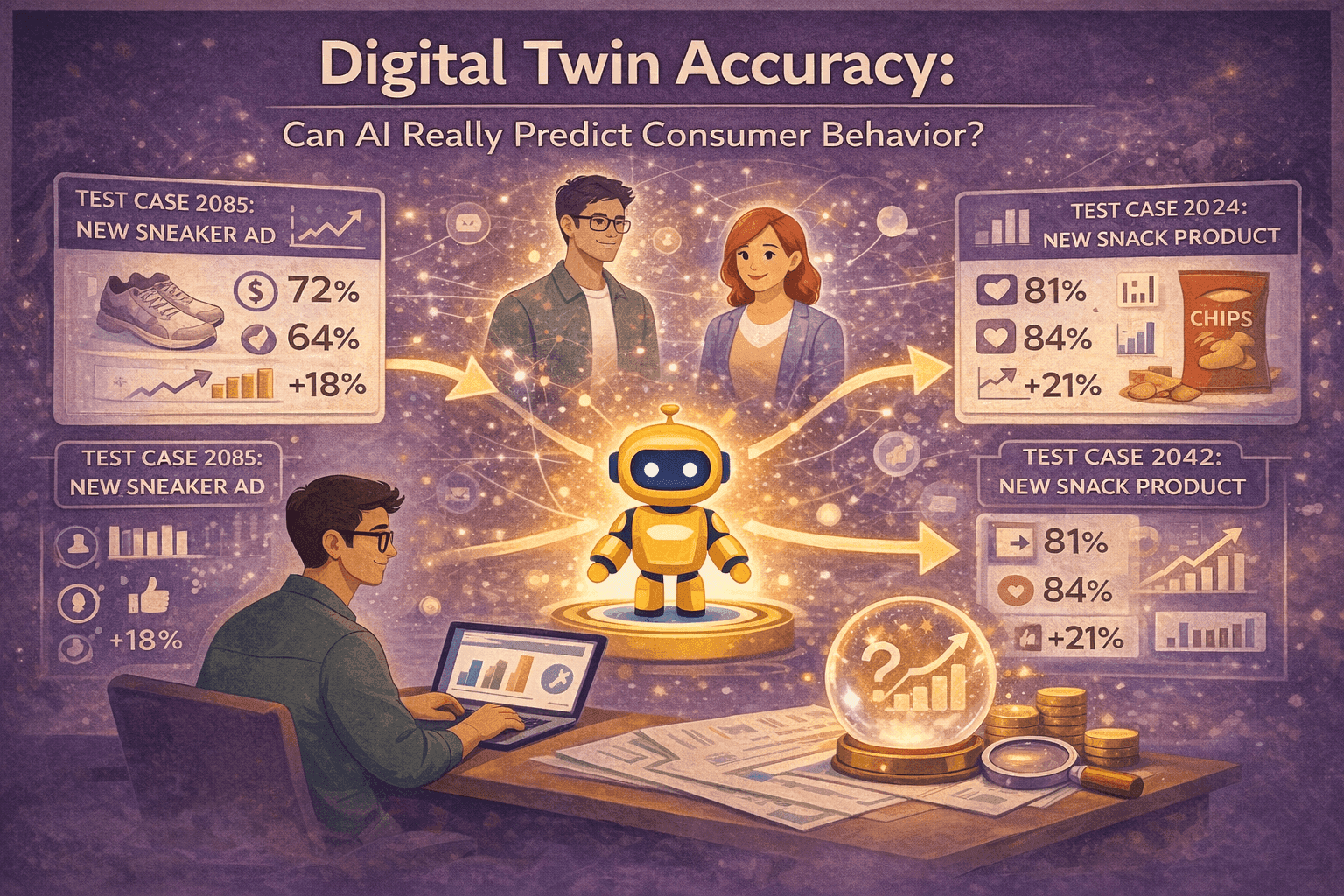

Digital Twin Accuracy: Can AI Really Predict Consumer Behavior? (2026 Benchmark Data)

Nehan Mumtaz

Think of traditional market research like trying to predict the weather by asking people what they think tomorrow will be like. Sure, some folks might guess right, but you're better off checking a weather app that uses actual data, right?

That's kind of where we are with AI and consumer behavior prediction in 2026. The question isn't whether AI can predict what shoppers will do. It's whether it can do it accurately enough that you can trust those predictions when you're about to spend real marketing dollars.

Let's cut through the hype and look at what the numbers actually say.

Where AI Predictions Failed Before (And Why That Matters)

Here's the thing about early AI consumer research tools: they were really good at sounding smart. Like that coworker who always has an opinion but never backs it up with data.

The biggest problem? These tools weren't trained to reflect real people. They were trained to sound convincing. There's a huge difference.

Research from 2025 showed that many AI models fell into what experts call the "average trap." They'd give you responses that sounded reasonable but were really just bland, middle-of-the-road answers that didn't match how real consumers think and shop.

Want a scary example? One insurance company's AI system was reported to have a 90% error rate on appeals: nine out of ten appealed denials were overturned when real humans reviewed them. Why? The AI was optimized for the wrong thing (saving money) instead of accuracy.

The lesson: just because an AI gives you an answer doesn't mean it's the right answer. You need proof it actually works.

What "Accuracy" Actually Means in Consumer Research

Here's where things get interesting. When most people hear "91% accurate," they think it means the AI gets the exact right number 91 times out of 100. But that's not quite how it works in market research.

Let's use a real example. Say you're testing two ad headlines:

• Headline A: "Save 20% Today"

• Headline B: "Join 50,000 Happy Customers"

A real survey might tell you 62% prefer Headline A. An AI digital twin might say 58% prefer Headline A.

Are those numbers exactly the same? Nope. But do they both tell you the same winner? Absolutely. And that's what matters for your business decision.

This is called directional correctness, and it's way more valuable than exact numerical precision. Think about it like this: you don't need your GPS to tell you you're exactly 2.47 miles from Starbucks. You just need to know which direction to turn.
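To make the distinction concrete, here is a minimal sketch of a directional-correctness check for the two-headline example above. It assumes a simple two-option question with no "no preference" answer, so whichever headline is above 50% wins; the function names are illustrative, not part of any product.

```python
def winner(share_a: float) -> str:
    """Return the winning headline for a two-option preference question.

    share_a is the percentage preferring Headline A; everyone else is
    assumed to prefer Headline B (no "no preference" option).
    """
    if share_a > 50:
        return "A"
    if share_a < 50:
        return "B"
    return "tie"


def directionally_correct(survey_share_a: float, twin_share_a: float) -> bool:
    """True when the survey and the digital twins pick the same winner."""
    return winner(survey_share_a) == winner(twin_share_a)


survey, twins = 62.0, 58.0  # percentages from the headline example
print(f"gap: {abs(survey - twins):.1f} points")              # gap: 4.0 points
print("same winner:", directionally_correct(survey, twins))  # same winner: True
```

The 4-point gap would count against an exact-match metric, but the decision (ship Headline A) is the same either way.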

What Different Accuracy Metrics Mean:

| Accuracy Type | What It Means For You |
|---|---|
| Exact Match | Getting the precise percentage (nice to have, rarely necessary) |
| Directional Correctness | Identifying which option wins (this is what you actually need) |
| Correlation | How closely AI predictions track real survey outcomes over many questions |

How Digital Twin Accuracy Actually Works

So what makes digital twins different from those old AI tools that failed?

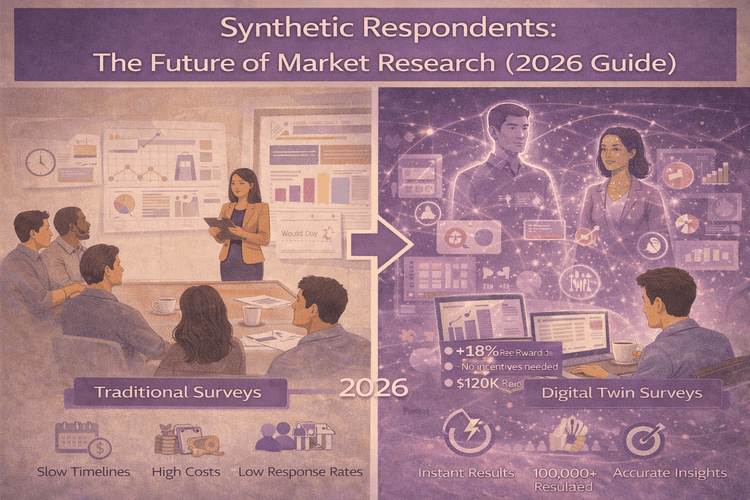

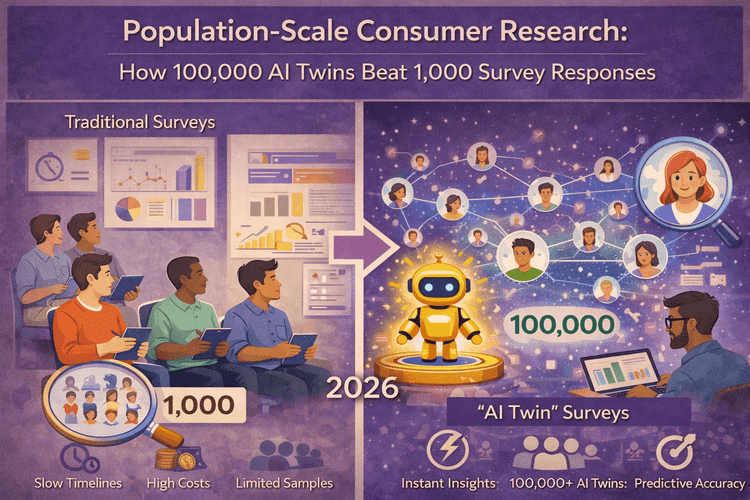

Think of it like this: traditional AI prediction is like trying to guess what your customers will do based on what they did last year. Digital twins are like having 100,000 virtual shoppers you can actually ask.

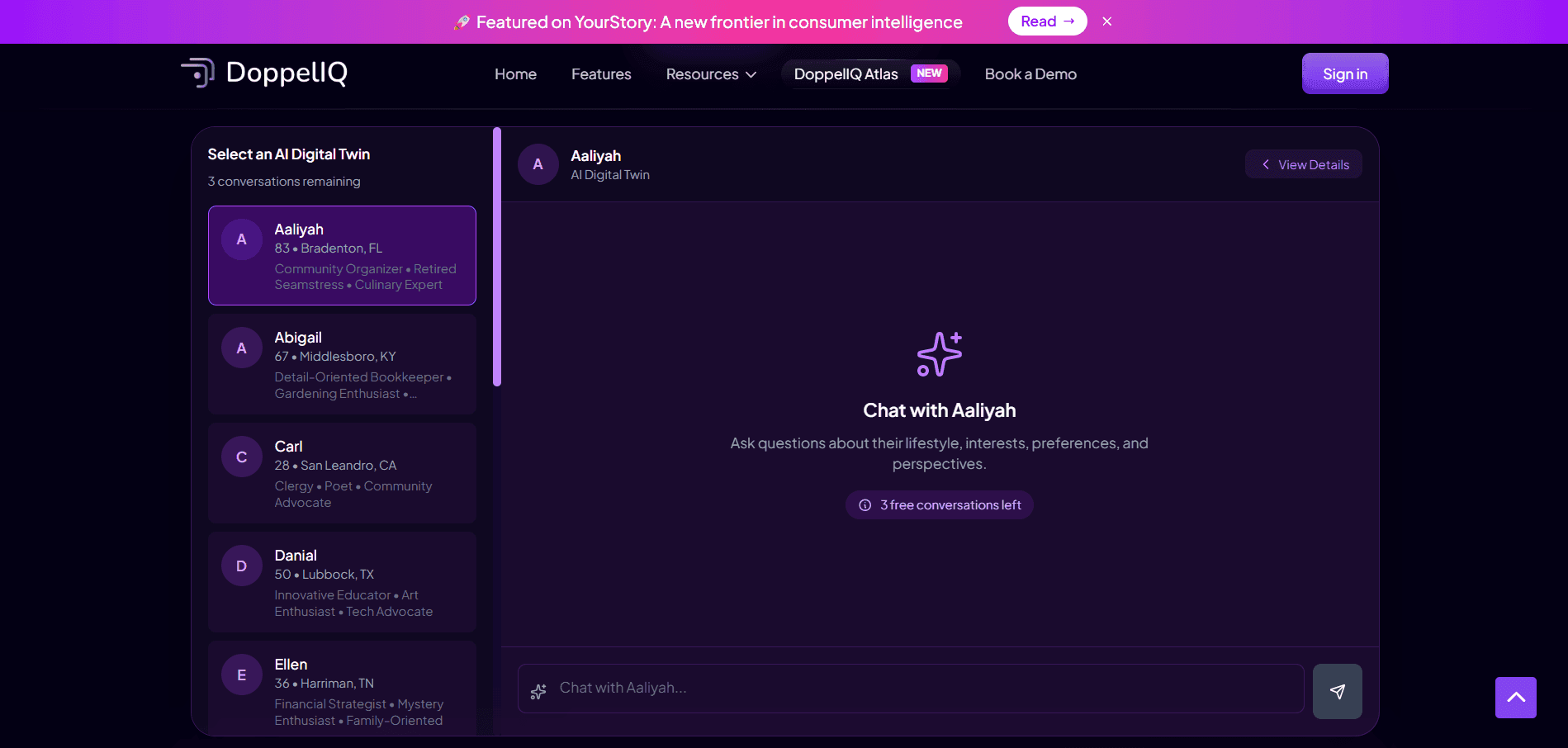

Here's how DoppelIQ Atlas works: instead of one AI making predictions, you get access to 100,000 AI digital twins. Each one represents a real US consumer profile, built from actual survey data, demographics, and lifestyle patterns.

The big difference? You're not asking an AI to predict behavior. You're surveying these digital twins just like you'd survey real people. The advantage is you get answers in minutes instead of waiting weeks for survey responses.
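Conceptually, "surveying" twins means asking every profile the same question and tallying the answers, just as you would with a human panel. The sketch below is hypothetical throughout: Atlas's actual interface isn't described here, and `HypotheticalTwin` is a stub that returns a fixed preference where a real twin would generate an answer from its profile.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class HypotheticalTwin:
    """Stand-in for one digital twin profile (hypothetical, not the Atlas API)."""
    age: int
    region: str
    prefers: str  # this stub always picks this option; a real twin would be a model

    def answer(self, question: str, options: list[str]) -> str:
        # The stub ignores the question and profile fields and returns its
        # fixed preference if offered, otherwise the first option.
        return self.prefers if self.prefers in options else options[0]


def run_survey(twins, question, options):
    """Ask every twin the same question and return the share (%) per option."""
    counts = Counter(t.answer(question, options) for t in twins)
    return {opt: 100.0 * counts[opt] / len(twins) for opt in options}


panel = [
    HypotheticalTwin(34, "West", "A"),
    HypotheticalTwin(51, "South", "A"),
    HypotheticalTwin(27, "Midwest", "B"),
    HypotheticalTwin(45, "Northeast", "A"),
]
print(run_survey(panel, "Which headline do you prefer?", ["A", "B"]))
# {'A': 75.0, 'B': 25.0}
```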

The 91% Correlation Benchmark Explained

Okay, let's talk about that 91% number you keep hearing about.

When DoppelIQ Atlas was tested against real consumer surveys, the results showed 91% correlation. What does that actually mean?

Researchers asked the same questions to both real survey panels and Atlas digital twins. Then they compared the results. Not just one or two questions, but across hundreds of different scenarios, audiences, and topics.

91% correlation means that when you ask Atlas "Which product feature matters most?" or "How do you feel about sustainable packaging?", the patterns in the answers across cohorts closely track what real surveys would tell you.

It doesn't mean every single percentage point matches exactly. It means the insights, preferences, and winners you identify are reliable.

Compare that to traditional survey methods that struggle with low response rates, survey bias, and high costs. The accuracy is comparable, but the speed and scale are totally different.

Why Consistent Signals Beat Perfect Predictions

Here's something most marketers figure out pretty quickly: you don't need perfect data. You need reliable patterns.

Imagine you're testing a new product concept. You run it by 500 real people and 63% say they'd buy it. Great! But what if you tested a different 500 people next week? You might get 59%. Or 67%. That's just normal variation.

What matters isn't the exact number. It's that both groups clearly lean toward "yes, I'd buy this." The directional signal is what drives your decision.
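The 59%-to-67% swing is roughly what sampling theory predicts. A minimal sketch using the textbook 95% margin of error for a proportion, assuming a simple random sample (real panels add other error sources on top of this):

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for proportion p from a simple random sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)


moe = margin_of_error(0.63, 500)
print(f"63% ± {moe * 100:.1f} points")                           # 63% ± 4.2 points
print(f"range: {63 - moe * 100:.1f}% to {63 + moe * 100:.1f}%")  # range: 58.8% to 67.2%
```

Both the 59% and 67% results fall inside that band, so either week's panel points to the same "yes."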

This is where population-scale digital twins actually shine. Instead of asking 500 people (and hoping they're representative), you can query 100,000 digital twins segmented exactly how you need them.

The consistency comes from two things:

• Sample size: With 100,000 profiles, you can drill into specific segments (by demographics, location, education, etc.) and still have thousands of responses

• Repeatability: Ask the same question today and next week? You'll get the same signal, which helps you track changes over time
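Here is a sketch of the segment-size point, using a hypothetical panel. The attribute names and categories are assumptions, and the panel is spread evenly across them purely for illustration, so real segment counts will depend on the actual population mix.

```python
from dataclasses import dataclass

REGIONS = ["Northeast", "Midwest", "South", "West"]
EDUCATION = ["high school", "some college", "bachelor's+"]
AGE_BANDS = ["18-24", "25-34", "35-44", "45-54", "55+"]


@dataclass(frozen=True)
class Profile:
    region: str
    education: str
    age_band: str


def make_hypothetical_panel(n: int = 100_000) -> list[Profile]:
    """Build n evenly spread placeholder profiles (not real Atlas data)."""
    return [
        Profile(
            region=REGIONS[i % 4],
            education=EDUCATION[(i // 4) % 3],
            age_band=AGE_BANDS[(i // 12) % 5],
        )
        for i in range(n)
    ]


def segment(panel, **criteria):
    """Return profiles whose attributes match every keyword criterion."""
    return [p for p in panel if all(getattr(p, k) == v for k, v in criteria.items())]


panel = make_hypothetical_panel()
west_grads_25_34 = segment(panel, region="West", education="bachelor's+", age_band="25-34")
print(len(west_grads_25_34))  # 1667
```

Even a three-way cut (4 × 3 × 5 = 60 cells) of 100,000 profiles leaves well over a thousand per cell, whereas the same cut of a 500-person panel would leave about 8.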

When Digital Twins Work Best (And When to Use Real Surveys)

Let's be honest: AI digital twins aren't magic. They're a tool, and like any tool, they work great for some jobs and not so great for others.

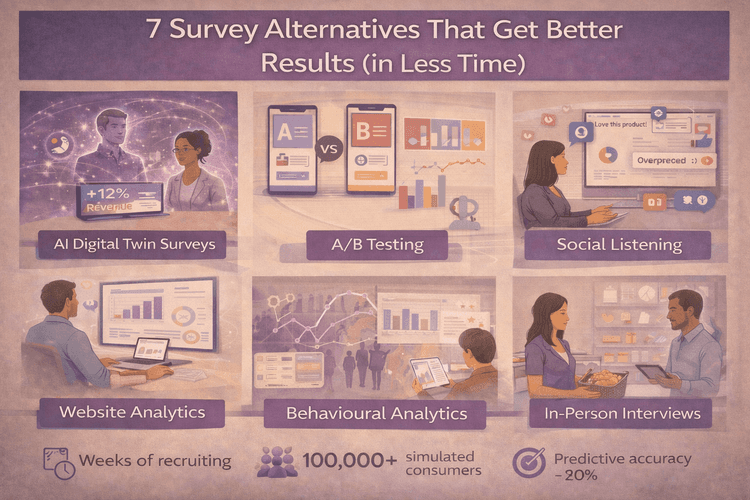

Digital twins excel at:

• Message testing ("Which headline gets more attention?")

• Concept validation ("Would people buy this new product?")

• Market segmentation ("How do different groups react?")

• Quick directional insights before big launches

• Exploring survey alternatives when time or budget is tight

You still want real human validation for:

• Truly novel products in brand-new categories

• Final validation before huge budget decisions

• Emotional or cultural nuance that requires deep conversation

• Markets outside the US (Atlas is US-focused)

The smartest approach? Use digital twins for rapid testing and exploration, then validate your top concepts with real consumers. Think of it like sketching before you paint. You wouldn't skip the sketch, but you also wouldn't present the sketch as the final artwork.
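The screen-then-validate workflow boils down to ranking concepts by their digital-twin results and sending only the leaders to a real-consumer survey. A minimal sketch; the scores and the choice of keeping three concepts are hypothetical.

```python
def shortlist_for_validation(twin_scores: dict[str, float], k: int = 3) -> list[str]:
    """Rank concepts by digital-twin score and keep the top k for a real-consumer survey."""
    ranked = sorted(twin_scores, key=twin_scores.get, reverse=True)
    return ranked[:k]


# Hypothetical purchase-intent scores (%) from a digital-twin screen.
scores = {
    "Concept A": 48.0,
    "Concept B": 61.5,
    "Concept C": 39.0,
    "Concept D": 57.0,
    "Concept E": 52.5,
}
print(shortlist_for_validation(scores))  # ['Concept B', 'Concept D', 'Concept E']
```

Choosing `k` is the budget lever: a smaller shortlist means a cheaper human survey, at the risk of cutting a concept the twins underrated.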

This is part of why synthetic respondents are increasingly seen as a complement to traditional research, not a replacement.

Real-World Applications in 2026

So what are marketing teams actually using digital twins for right now?

One retail brand tested holiday campaign messaging across different regions before committing to ad spend. Instead of waiting three weeks for panel responses, they got directional insights in minutes and adjusted their creative before launch.

A CPG company exploring a new product category used digital twins to map out which consumer segments showed the most interest. They found unexpected enthusiasm from a demographic they hadn't considered, which shaped their entire go-to-market strategy.

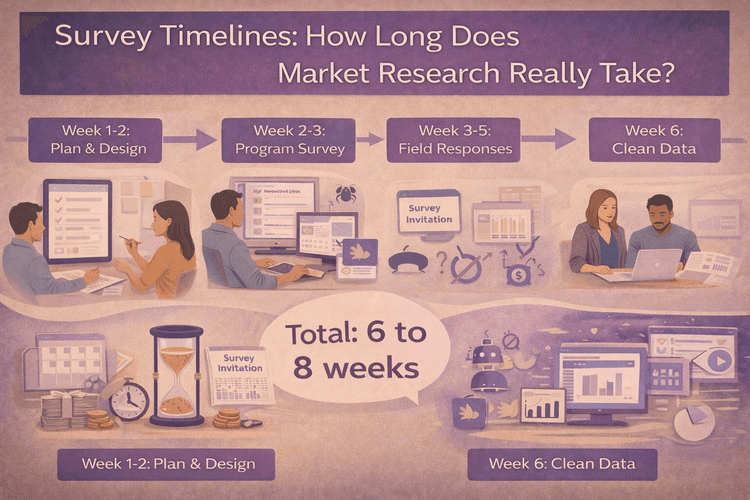

The common thread? Speed and scale. When you can test ten messaging variations across five audience segments in an afternoon, you make better decisions faster. Compare that to traditional consumer research timelines that take weeks or months.
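Ten variations across five segments is a 50-cell test grid, which is where fast turnaround matters. A sketch of laying out the grid and reading off the winner per segment; the variant and segment names are placeholders, and the results dictionary would come from whatever survey method you use.

```python
from itertools import product


def build_grid(variants, segments):
    """Every (variant, segment) pair that needs a result: one cell per combination."""
    return list(product(variants, segments))


def best_by_segment(results):
    """results maps (variant, segment) -> share (%) preferring that variant.

    Returns the top-scoring variant for each segment.
    """
    best = {}
    for (variant, seg), share in results.items():
        if seg not in best or share > results[(best[seg], seg)]:
            best[seg] = variant
    return best


variants = [f"Variant {i}" for i in range(1, 11)]  # ten messaging variations
segments = [f"Segment {i}" for i in range(1, 6)]   # five audience segments
print(len(build_grid(variants, segments)))  # 50
```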

The Bottom Line on Digital Twin Accuracy

Can AI really predict consumer behavior? Based on 2026 benchmark data, the answer is yes, with important caveats.

Digital twins like DoppelIQ Atlas achieve 91% correlation with real survey outcomes. That's not perfect, but it's reliable enough to drive real business decisions, especially for directional insights and rapid testing.

The key is understanding what accuracy means in your context. You're not trying to predict exact percentages. You're trying to identify patterns, preferences, and winners. And for that, digital twins deliver consistent, actionable signals at a scale and speed that traditional research can't match.

The future isn't AI replacing human insight. It's AI helping you make better decisions faster, so you can spend your time and budget validating the ideas that matter most.

Frequently Asked Questions

What does 91% correlation actually mean?

It means digital twin responses closely track real survey patterns when both are asked the same questions.

Can digital twins replace real surveys completely?

Not entirely. They're best for rapid testing and directional insights. Validate big decisions with real consumers.

How is this different from AI personas?

AI personas are fictional characters. Digital twins are population-scale models trained on real consumer data with 100,000+ diverse profiles.

Do I need to provide my own data?

No. DoppelIQ Atlas comes pre-built with 100,000 US consumer profiles. You just ask questions and get answers.

How quickly can I get insights?

Minutes instead of weeks. Ask your question in natural language and get results without waiting for panel fieldwork.

Is this only for US markets?

Currently yes. Atlas is built from US consumer data and optimized for American market research.

What types of questions can I ask?

Anything you'd ask in traditional market research: purchase intent, feature preferences, messaging tests, brand perception, and more.

How do you prevent AI bias?

Digital twins are trained on diverse real consumer data. Atlas also helps you map and identify biases rather than claiming to eliminate them.

Try DoppelIQ Atlas for Free

Want to see how digital twin accuracy works for your own research questions? Sign up for DoppelIQ Atlas and get instant access to 100,000 AI consumer digital twins. No credit card required. Ask your market research questions in plain English and get insights in minutes, not weeks.

Learn more about how digital twins transform consumer research or explore our complete guide to consumer research platforms.
