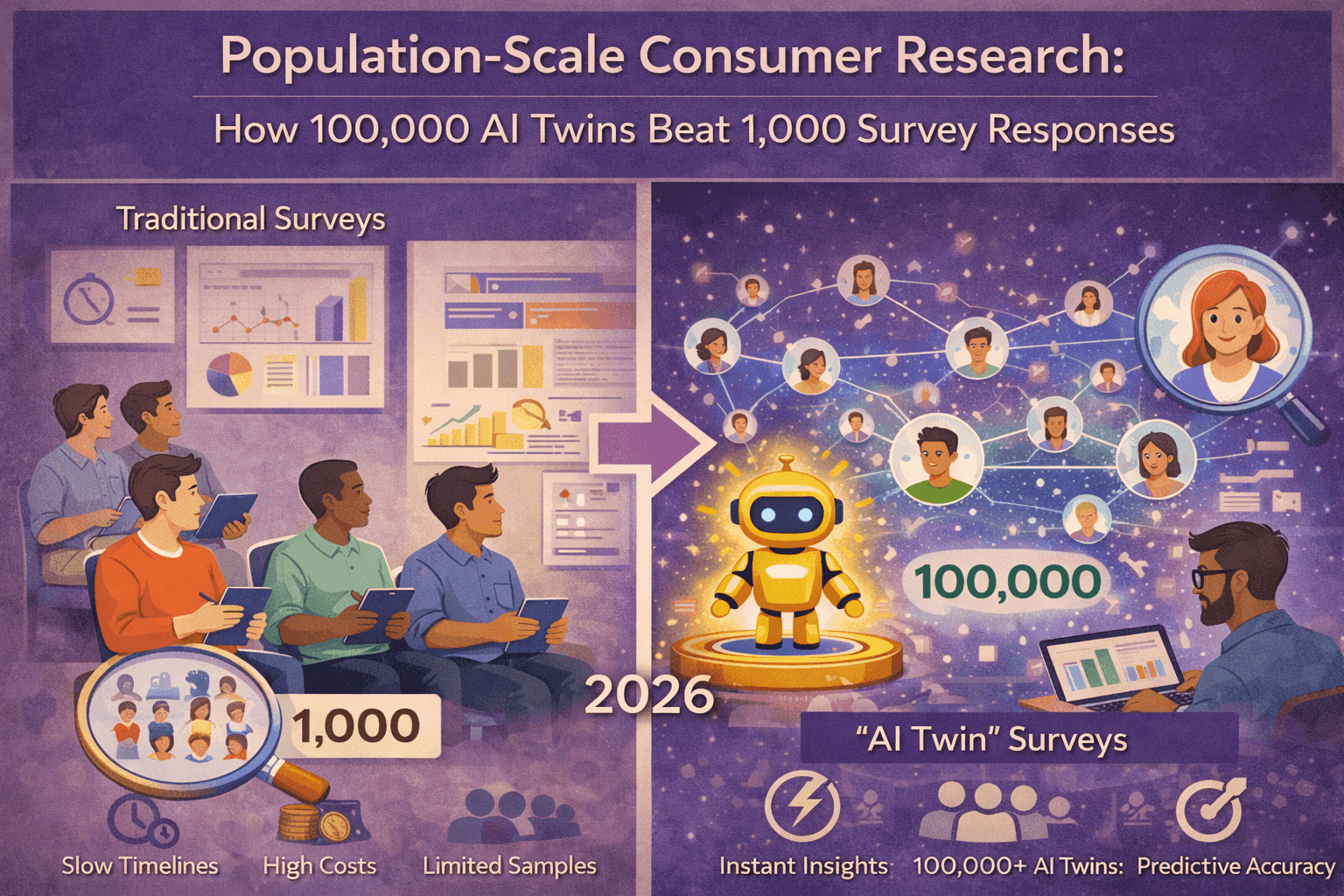

Population-Scale Consumer Research: How 100,000 AI Twins Beat 1,000 Survey Responses

Nehan Mumtaz

Think about the last time you tried a new restaurant based on reviews. Would you trust 10 reviews, or 10,000? The answer is obvious.

Yet when it comes to making million-dollar marketing decisions, many companies still rely on feedback from just 300 to 1,000 people.

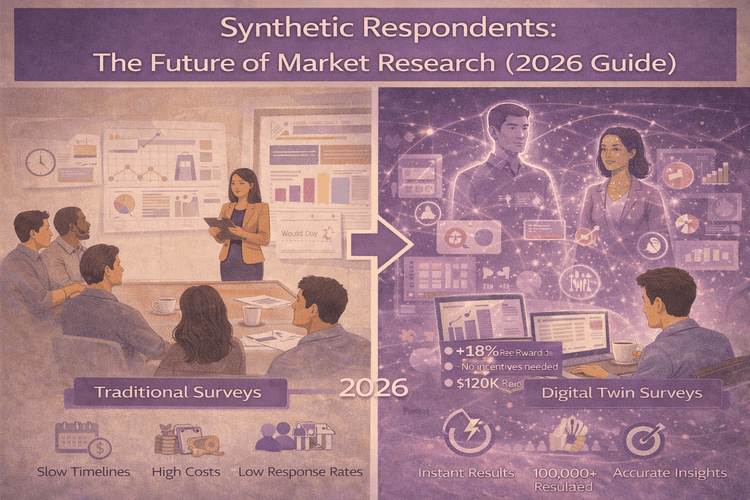

That approach may be familiar, but it comes with clear limitations: high costs, long timelines, and insights that often arrive after key decisions have already been made. That makes it a poor fit for teams that need fast, ongoing understanding of their users.

So why are businesses still working with such small samples? And what does population-scale research actually unlock when it comes to getting better answers, faster?

Why We Got Stuck at 1,000 Respondents

The 300-1,000 respondent "standard" wasn't based on science. It was based on what companies could afford.

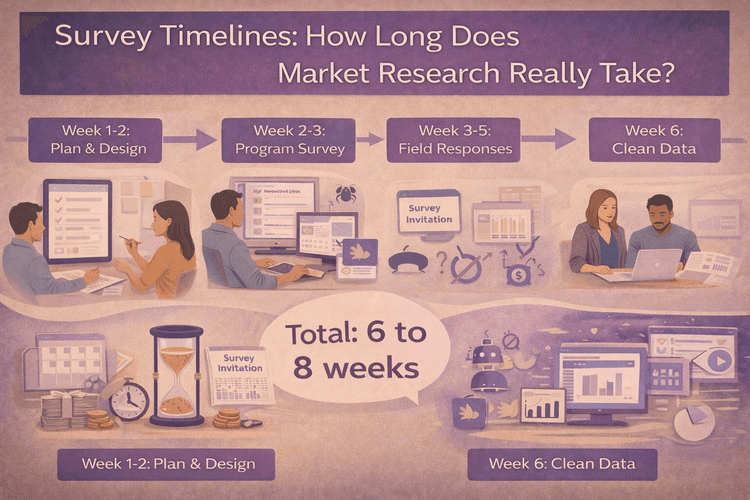

Running a traditional survey is expensive. You need to recruit people, incentivize them to respond, wait for them to complete it, then clean up incomplete or dishonest answers. Survey costs add up fast, which is why most research budgets can only stretch to about 1,000 responses.

Then there's the time problem. Traditional survey timelines can stretch for weeks or even months. You need to design questions, program the survey, recruit participants, wait for responses to trickle in, and then analyze results. By the time you get answers, the market may have already moved on.

And don't forget response rates. If you're lucky, you'll get 20-30% of people to actually complete your survey. That means to get 1,000 responses, you might need to contact roughly 3,300 to 5,000 people.
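The arithmetic behind that estimate is a single division. Here is a minimal sketch in Python; the function name `contacts_needed` is ours, chosen for illustration, and the only inputs are the 1,000-response target and the 20-30% response rates quoted above.

```python
import math


def contacts_needed(target_responses: int, response_rate: float) -> int:
    """People to contact so that the expected number of completes hits the target.

    Illustrative helper (not part of any survey tool): target / rate, rounded up.
    """
    if not 0 < response_rate <= 1:
        raise ValueError("response_rate must be in (0, 1]")
    return math.ceil(target_responses / response_rate)


# Response rates taken from the 20-30% range mentioned in the text.
for rate in (0.20, 0.30):
    print(f"{rate:.0%} response rate -> contact {contacts_needed(1000, rate):,} people")
```

At a 20% response rate this works out to 5,000 contacts, and at 30% to 3,334.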

So we settled for 1,000 respondents not because it's ideal, but because it's what was possible within normal budgets and timelines.

Traditional Surveys vs. Population-Scale Research: The Real Comparison

| Factor | Traditional Survey (1,000 responses) | Population-Scale AI Twins (100,000) |
|---|---|---|
| Time to Insights | 2–8 weeks | Minutes |
| Cost | $10,000–$50,000+ | $99–$500 |
| Response Rate | 20–30% | 100% |
| Geographic Coverage | Limited segments | Complete US coverage |
| Micro-segment Size | 20–50 per group | 1,000s per group |
| Bias Issues | High (fatigue, social desirability) | Minimal (behavior-based) |
| Completion Rate | 60–80% | 100% |
| Edge Case Detection | Poor | Excellent |

What Population-Scale Actually Means

Population-scale research isn't just about having more responses. It's about covering the actual diversity of your market.

Think of it like a map. A survey of 1,000 people is like looking at your state through a keyhole. You can see some buildings, maybe a road or two, but you're missing entire neighborhoods, the countryside, the small towns, and everything in between.

Population-scale research gives you the satellite view. You see everything: cities and rural areas, different age groups and income levels, various professions and lifestyles.

Here's what complete coverage actually looks like:

Geographic diversity: Not just "Northeast vs. Southwest" but the difference between consumers in Portland, Maine versus Portland, Oregon. Between suburban Atlanta and rural Georgia. Between downtown Chicago and small-town Illinois.

Demographic intersections: Instead of broad buckets like "millennials" or "parents," you can understand millennial teachers in the Midwest, or Gen X small business owners in the South, or retired veterans in Florida.

Professional contexts: A nurse has different needs and shopping behaviors than a software engineer, even if they're the same age and income. Traditional surveys lump them together.

Aspirational segments: Some people are climbing the career ladder aggressively. Others prioritize work-life balance. These motivations shape buying decisions more than age or income alone.

When you only survey 1,000 people, you might get 50 responses from rural areas, 30 from retirees, and maybe 15 from luxury buyers. That's not enough to understand how these groups really think and behave.
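To put a number on "not enough," here is a rough sketch using the standard margin-of-error formula for a proportion. It assumes simple random sampling, a 95% confidence level, and the worst case of a 50/50 split, and it covers sampling error only. The function name is ours, and the subgroup sizes are the ones from the paragraph above.

```python
import math

Z_95 = 1.96  # z-score for a 95% confidence level


def margin_of_error(n: int, p: float = 0.5) -> float:
    """Sampling margin of error for a proportion p measured on n respondents."""
    return Z_95 * math.sqrt(p * (1 - p) / n)


# Subgroup sizes from the example: luxury buyers, retirees, rural, full sample.
subgroups = [("luxury buyers", 15), ("retirees", 30), ("rural", 50), ("full sample", 1000)]
for label, n in subgroups:
    print(f"{label:<13} n={n:>5}  +/- {margin_of_error(n):.1%}")
```

Under these assumptions, a 15-person subgroup carries a margin of error of about 25 percentage points, compared with about 3 points for the full 1,000.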

The Long-Tail Problem: What Small Samples Miss

Here's where things get interesting. Most market research focuses on finding the average customer. But your business doesn't succeed or fail based on averages. It succeeds based on the full range of customer types you serve.

Imagine you're launching a new product. Your survey of 1,000 people shows 70% approval. Sounds great, right? You launch confidently.

Then the product flops in the Southeast. Turns out there's a cultural sensitivity you missed because you only had 80 respondents from that region, and most were from major cities. The rural and small-town shoppers who make up a huge chunk of the market? You barely heard from them.

Or maybe your product does okay overall but completely misses a passionate niche audience that could have been your best advocates. With only 1,000 responses, you had maybe 20 people in that segment. Not enough to spot the opportunity.

This is the long-tail problem. Traditional surveys give you the big picture, but they're blind to the edges where real business opportunities and risks live.

Why Edge Cases Matter More Than You Think

In retail and marketing, edge cases aren't edge cases at all. They're often where your next big win is hiding.

Early adopters and enthusiasts? They're in the edges. The consumers who will become brand evangelists and drive word-of-mouth? Often in smaller, passionate segments. The cultural or regional sensitivities that could tank a campaign? Hiding in the groups you undersampled.

Think about it this way: if you're testing a new campaign and 80% of people react positively, that sounds like a win. But what if the 20% who dislike it are concentrated in a specific demographic that happens to be extremely vocal on social media? Or what if they represent a high-value customer segment?

With 1,000 responses, you might only have 200 people in that "negative" group. Too small to understand what's driving their reaction or how serious the problem really is.

Population-scale research with 100,000 data points means you might have 20,000 people in that segment. Now you can actually understand the problem, how widespread it is, and what to do about it.

How 100,000 AI Digital Twins Work Differently

This is where AI digital twins change the game entirely.

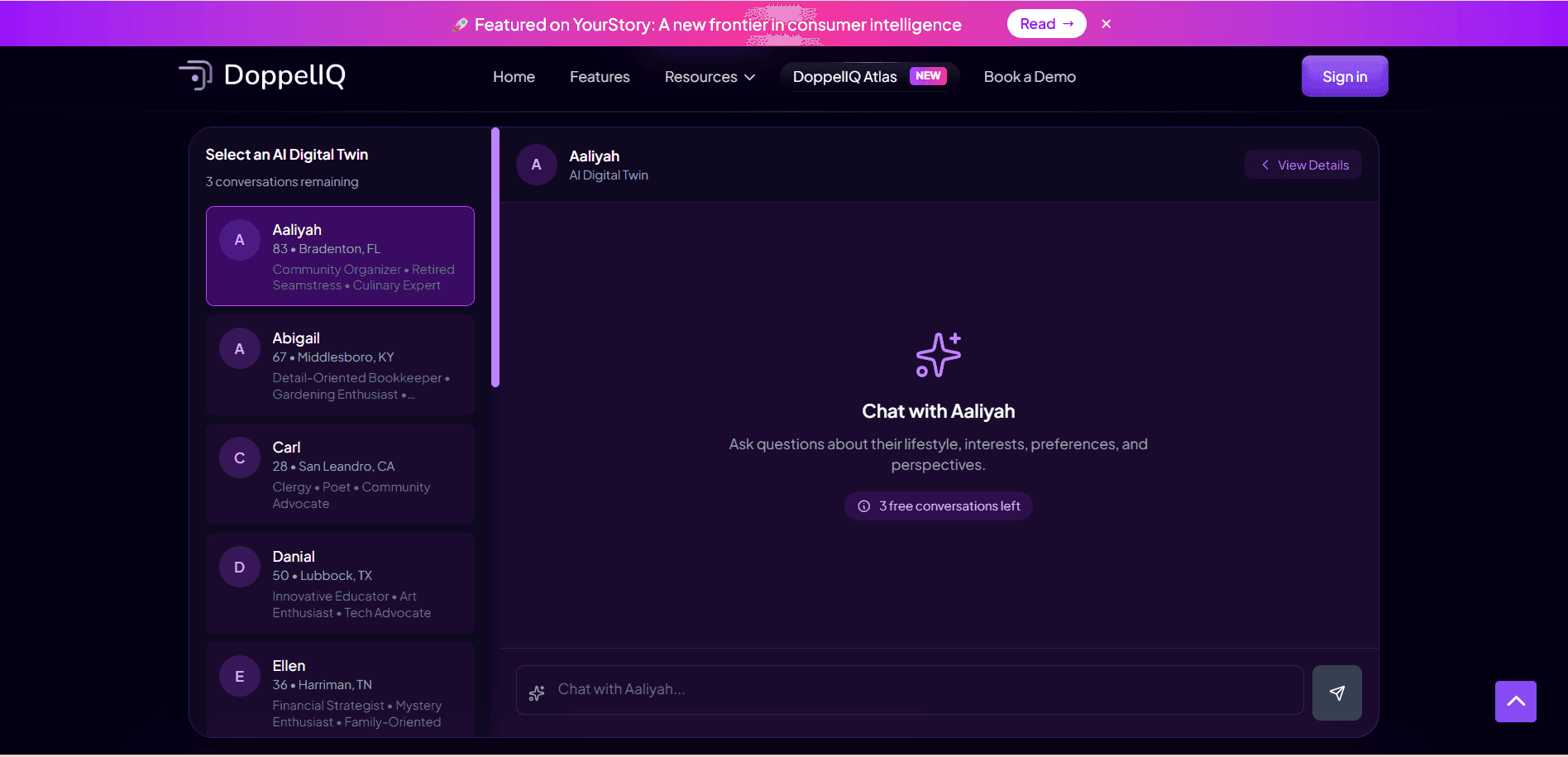

DoppelIQ Atlas isn't 100,000 random AI chatbots. It's a population model built on real US consumer data including national surveys, behavioral patterns, demographics, psychographics, and lifestyle information.

Each digital twin represents an actual consumer profile. Not a made-up persona, but a simulation grounded in how real people actually behave, not just what they say they do.

Here's why this matters: when you survey real people, they often give you the answer they think you want to hear. They misremember their own behavior. They get tired and rush through questions. Survey bias is a massive problem in traditional research.

Digital twins don't have these problems. They're built from actual behavioral data, so they respond based on how consumers actually act, not how they claim to act.

The platform covers the full spectrum of US consumers: every major demographic group, professional category, geographic region, and psychographic segment. And because it's already built, you don't need to recruit anyone or wait for responses.

You just ask your question in plain English, and get answers in minutes. Not weeks. Minutes.

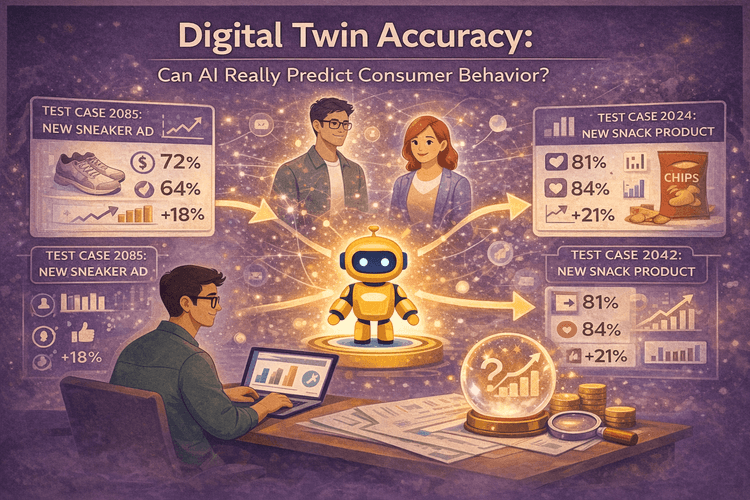

The accuracy? About 91% correlation with real-world consumer survey outcomes. That's remarkably high for a tool that costs a fraction of traditional research and delivers results in minutes.
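This article doesn't spell out how that correlation is calculated. One common way to express agreement between two sets of results is the Pearson correlation coefficient, so here is a minimal sketch under that assumption. The numbers are hypothetical, made up for illustration, and are not DoppelIQ data.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of the same length, at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / (spread_x * spread_y)


# Hypothetical share of respondents choosing "would buy" on five questions.
survey_share = [0.62, 0.48, 0.71, 0.35, 0.55]  # real survey (made-up values)
twin_share = [0.60, 0.51, 0.68, 0.40, 0.52]    # simulated twins (made-up values)

print(f"correlation: {pearson_r(survey_share, twin_share):.2f}")
```

A value near 1 means the simulated results rise and fall with the survey results across questions. It does not by itself say the two sets of percentages match point for point.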

From Hypothesis Testing to True Exploration

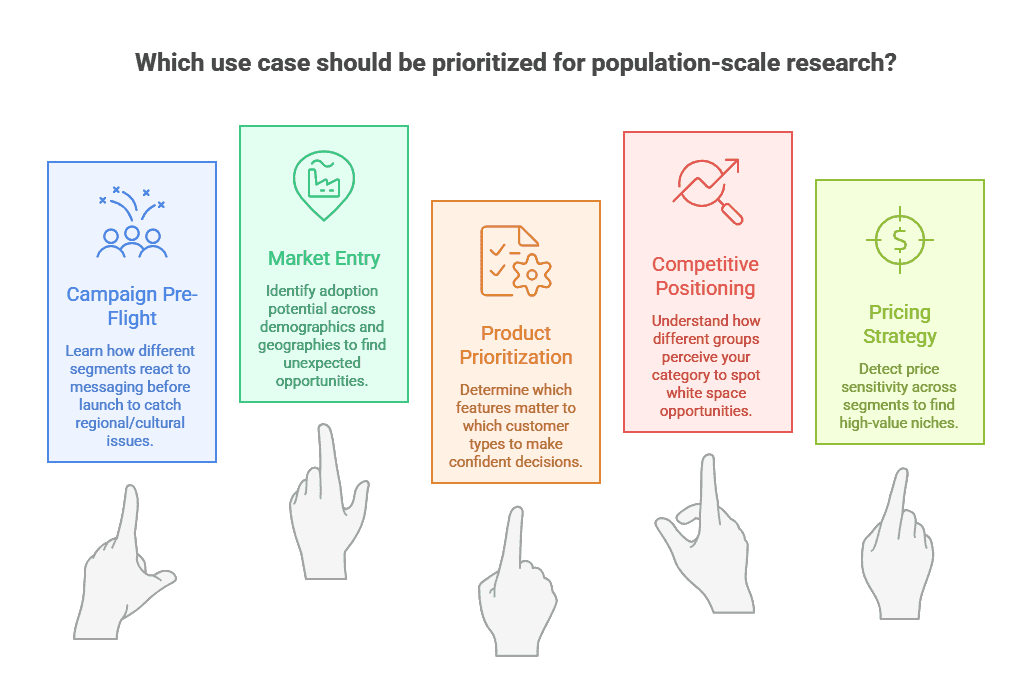

Here's the mindset shift that population-scale research enables.

Traditional surveys are built for testing hypotheses. You have an idea, you create a survey to validate it, you get a yes or no answer.

Population-scale research lets you explore. You can ask open-ended questions and see patterns emerge across dozens of micro-segments simultaneously. You can discover opportunities you didn't know existed.

Want to test five different campaign messages? With traditional research, you'd need to split your 1,000 respondents into groups, meaning each message only gets 200 responses. With 100,000 digital twins, each message gets tested across 20,000 diverse profiles.
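Here is a quick sketch of what that split means in practice. It assumes equal-sized groups, a 95% confidence level, and a worst-case 50% approval rate, and it looks at sampling error only. The function names are ours, for illustration.

```python
import math

Z_95 = 1.96  # z-score for a 95% confidence level


def per_message_sample(total: int, messages: int) -> int:
    """Respondents per message when the sample is split evenly."""
    return total // messages


def diff_margin(n_per_message: int, p: float = 0.5) -> float:
    """Margin of error on the gap between two messages' approval rates."""
    return Z_95 * math.sqrt(2 * p * (1 - p) / n_per_message)


for total in (1_000, 100_000):
    n = per_message_sample(total, 5)
    print(f"{total:>7,} total -> {n:>6,} per message, gap resolved to +/- {diff_margin(n):.1%}")
```

With 200 responses per message, two messages need to differ by roughly 10 percentage points before the gap stands out from sampling noise. With 20,000 per message, that threshold drops to about 1 point.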

Want to understand how different types of professionals respond to your product? With Atlas, you can segment by occupation and still have thousands of responses per category.

Looking for unexpected product-market fit? You can explore how 50 different micro-segments react and find the hidden gem audiences your competitors missed.

This is what modern consumer research solutions should enable: exploration, not just validation.

When to Use What

Let's be clear: AI digital twins aren't the answer to everything. They're one powerful tool in your research toolkit.

Atlas excels at:

- Testing concepts, messages, and positioning quickly
- Exploring multiple market segments simultaneously
- Understanding geographic or demographic variations
- Pre-validating ideas before expensive launches
- Getting directional insights fast and affordably

Traditional human surveys still have their place for:

- Deeply emotional or personal topics
- Brand-new product categories with no precedent
- Situations where you need direct quotes and stories
- Final validation before major launches

The smartest approach? Use Atlas for broad exploration and rapid iteration, then follow up with targeted human research for depth where you need it. You'll make better decisions, faster, and spend your research budget where it matters most.

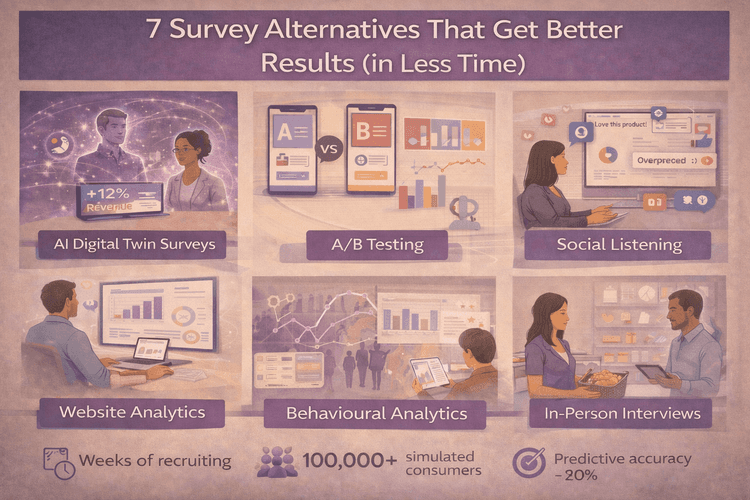

Many teams are also exploring survey alternatives that complement traditional methods rather than replacing them entirely.

The Bottom Line

We've been living with an artificial constraint for decades: the idea that 300-1,000 responses is "enough" for market research. It was never enough. It was just what we could afford.

Now that constraint is gone. Population-scale research gives you complete coverage of your market, reveals long-tail opportunities and risks, and delivers insights in minutes instead of weeks.

The question isn't whether you can afford to upgrade your research approach. It's whether you can afford not to, while your competitors are making faster, better-informed decisions.

Frequently Asked Questions

How accurate are AI digital twins compared to real surveys?

Atlas maintains about a 91% correlation with real consumer survey outcomes. It can also be more accurate than traditional surveys in cases where people misremember their behavior or give socially desirable answers.

Do I need to provide any customer data to use Atlas?

No. Atlas is pre-built with 100,000+ US consumer profiles already trained on national data. You don't upload anything.

How is this different from creating AI personas?

AI personas are inferred from broad heuristics, not grounded behavioral data. Atlas is a population model grounded in real US consumer data, surveys, and behavioral patterns.

Can marketing teams use this without data scientists?

Yes. You ask questions in plain conversational language like a normal consumer interview. No coding or technical skills required.

How long does it take to get insights?

Minutes. The digital twins are already built, so you type your question and get immediate responses.

What if my industry or product category is very specific?

Atlas covers all US consumer demographics, professions, and geographies. You can ask category-specific questions and segment by relevant characteristics.

When should I still use traditional human surveys?

Use traditional surveys for deeply personal stories, completely new territory with no precedent, or when you need direct quotes for presentations.

How often does Atlas update with new consumer data?

Annually, based on new US consumer survey benchmarks and behavioral data to stay current with trends.

Is there a free trial?

Yes. Sign up free at www.doppeliq.ai and start getting insights immediately with no credit card required.

Ready to Try Population-Scale Research?

Sign up for DoppelIQ Atlas free and get your first insights in minutes. No credit card required, no data science degree needed. Just ask your research questions in plain English and see what 100,000 AI consumer digital twins can tell you.

You'll understand your market better than you ever could with 1,000 survey responses. And you'll wonder why you ever settled for the keyhole view when you could have had the satellite.
